Automatically correcting phrasing and misrecognized words in speech-to-text captions by using a script

Tags: speechtotext, subed, emacs

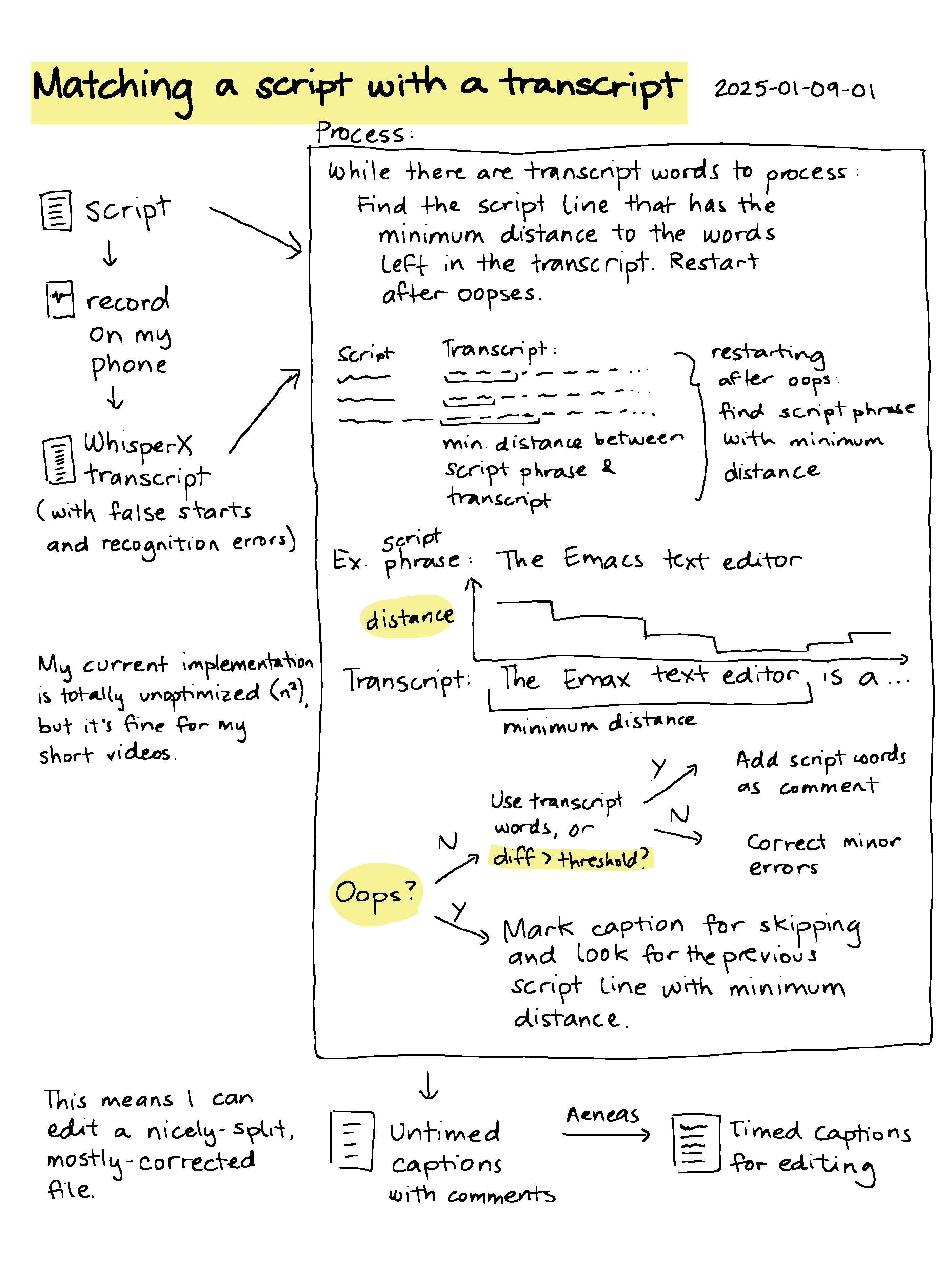

I usually write my scripts with phrases that could be turned into subtitles. I figured I might as well combine that information with the WhisperX transcripts, which I use to cut out my false starts and oopses. To do that, I use the string-distance function, which returns the Levenshtein distance between two strings: the number of single-character insertions, deletions, and substitutions needed to turn one into the other. If I take each line of the script and compare it with the list of words in the transcript, I can add one transcribed word at a time until I find the number of words with the minimum distance from my current script phrase. This lets me approximately match strings despite misrecognized words.

I use oopses to signal mistakes. When I detect one, I look for the previous script line that is closest to the words I restart with, so I can skip the previous lines automatically. When the script and the transcript are close, I can automatically correct the words. If not, I can use comments to easily compare them at that point. Even though I haven't optimized anything, it runs well enough for my short videos. With these subtitles as a base, I can get timestamps with subed-align, and then there's just the matter of tweaking the times and adding the visuals.
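To make the prefix-growing match concrete, here's a minimal Python sketch. It is not the Emacs Lisp in subed-word-data.el: `levenshtein` stands in for Emacs's `string-distance`, and `best_prefix` is a hypothetical name for the "add one word at a time" loop. It keeps the first word count that reaches the lowest distance.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: the fewest insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute, free if the characters match
            ))
        prev = curr
    return prev[-1]


def best_prefix(phrase: str, words: list[str]) -> tuple[int, int]:
    """Add transcript words one at a time; return (word count, distance) at the minimum."""
    best_n, best_d = 0, len(phrase)  # zero words: delete every character of phrase
    for n in range(1, len(words) + 1):
        d = levenshtein(phrase, " ".join(words[:n]))
        if d < best_d:  # strict, so ties keep the shorter prefix
            best_n, best_d = n, d
    return best_n, best_d


words = "The Emax text editor is a".split()
print(best_prefix("The Emacs text editor", words))  # (4, 2)
```

On the sketch's own example, the distance falls as words are added, bottoms out at "The Emax text editor" (two edits: c becomes x and the s is dropped), then rises again, like the bar graph in the sketch.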

Text from sketch

Matching a script with a transcript 2025-01-09-01

- script

- record on my phone

- WhisperX transcript (with false starts and recognition errors)

My current implementation is totally unoptimized (O(n²)), but it's fine for short videos.

Process:

- While there are transcript words to process

- Find the script line that has the minimum distance to the words left in the transcript. Restart after oopses.

- Script

- Transcript: min. distance between script phrase & transcript

- Restarting after oops: find script phrase with minimum distance

- Ex. script phrase: The Emacs text editor

- Transcript: The Emax text editor is a…

- Bar graph of distance decreasing, and then increasing again

- Minimum distance

- Oops?
  - N: Use transcript words, or diff > threshold?
    - Y: Add script words as comment
    - N: Correct minor errors
  - Y: Mark caption for skipping and look for the previous script line with minimum distance.
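The flowchart can be sketched in Python as below. This is an illustration under several assumptions, not a port of subed-word-data-use-script-file: a word counts as an oops if it matches the regex `\boops\b`, distances are normalized by the script line's length, and the 0.25 threshold is an arbitrary placeholder. `Caption`, `best_prefix`, and `align` are hypothetical names, and the sketch leaves out the option to always keep the transcript words. Matching stops at the next "oops" so that a false start can't swallow the retake.

```python
import re
from dataclasses import dataclass
from typing import Optional

OOPS = re.compile(r"\boops\b", re.IGNORECASE)  # assumed marker for a false start


@dataclass
class Caption:
    text: str
    line: int                         # index of the script line this caption matched
    skip: bool = False                # omit this caption when editing the audio
    note: Optional[str] = None        # the other version of the text, for comparison
    distance: Optional[float] = None  # edit distance / script line length


def levenshtein(a: str, b: str) -> int:
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = curr
    return prev[-1]


def best_prefix(phrase: str, words: list[str]) -> tuple[int, float]:
    """Leading word count closest to phrase, with distance normalized by len(phrase)."""
    best_n, best_d = 0, len(phrase)
    for n in range(1, len(words) + 1):
        d = levenshtein(phrase, " ".join(words[:n]))
        if d < best_d:
            best_n, best_d = n, d
    return best_n, best_d / max(len(phrase), 1)


def align(script: list[str], words: list[str], threshold: float = 0.25) -> list[Caption]:
    captions: list[Caption] = []
    k = 0  # next script line to match
    while words and k < len(script):
        # Match only up to the next "oops" so a false start can't swallow the retake.
        limit = next((i for i, w in enumerate(words) if OOPS.search(w)), len(words))
        n, dist = best_prefix(script[k], words[:limit])
        heard = " ".join(words[:n])
        if n == limit < len(words):
            # Oops: skip the false start (including the "oops" itself) ...
            captions.append(Caption(" ".join(words[:limit + 1]), k, skip=True))
            words = words[limit + 1:]
            if not words:
                break
            # ... then restart from the current or earlier script line that best
            # matches what follows, and skip captions already made for those lines.
            k = min(range(k + 1), key=lambda j: best_prefix(script[j], words)[1])
            for cap in captions:
                if cap.line >= k:
                    cap.skip = True
            continue
        if dist > threshold:
            captions.append(Caption(heard, k, note=script[k], distance=dist))  # keep transcript
        elif dist > 0:
            captions.append(Caption(script[k], k, note=heard, distance=dist))  # fix minor errors
        else:
            captions.append(Caption(script[k], k))
        words = words[n:]
        k += 1
    return captions


script = ["it runs well enough for my short videos.",
          "With these subtitles as a base,",
          "I can get timestamps with subed-align"]
words = ("it runs well enough for my short videos. With these subtitles as a base, "
         "I can get... Oops. With these subtitles as a base, "
         "I can get timestamps with subedeline, and then there's just").split()
caps = align(script, words)
for cap in caps:
    print(cap.skip, cap.text, cap.note)
```

On the script excerpt used later in this post, the sketch produces the same five captions as subed's output: the first attempt and the "I can get... Oops." fragment are marked for skipping, and the final caption uses the script text, with the misrecognized "subedeline," kept as a note.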

Result:

- Untimed captions with comments

- Aeneas

- Timed captions for editing

This means I can edit a nicely split, mostly corrected file.

I've included the links to various files below so you can get a sense of how it works. Let's focus on an excerpt from the middle of my script file.

it runs well enough for my short videos. With these subtitles as a base, I can get timestamps with subed-align

When I call WhisperX with large-v2 as the model and --max_line_width 50 --segment_resolution chunk --max_line_count 1 as the options, it produces these captions corresponding to that part of the script.

01:25.087 --> 01:29.069
runs well enough for my short videos. With these subtitles

01:29.649 --> 01:32.431
as a base, I can get... Oops. With these subtitles as a base, I

01:33.939 --> 01:41.205
can get timestamps with subedeline, and then there's just
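The matching works on a flat list of transcript words. As a hypothetical illustration (subed-word-data.el may well read WhisperX's word-level data instead), here's a Python sketch that pulls the words out of cue text like the captions above. `vtt_words` is an illustrative name; it ignores the WEBVTT header, NOTE blocks, and cue identifiers, but doesn't handle every corner of the WebVTT format.

```python
import re


def vtt_words(vtt: str) -> list[str]:
    """Return the words of every cue's text, in order."""
    words: list[str] = []
    # Blocks are separated by blank lines; header and NOTE blocks have no "-->".
    for block in re.split(r"\n[ \t]*\n", vtt.strip()):
        lines = block.splitlines()
        for i, line in enumerate(lines):
            if "-->" in line:
                # Lines before the timing line are an optional cue identifier;
                # lines after it are the caption text.
                for text in lines[i + 1:]:
                    words.extend(text.split())
                break
    return words


sample = """WEBVTT

01:25.087 --> 01:29.069
runs well enough for my short videos. With these subtitles

01:29.649 --> 01:32.431
as a base, I can get... Oops. With these subtitles as a base, I

01:33.939 --> 01:41.205
can get timestamps with subedeline, and then there's just
"""
print(vtt_words(sample))
```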

Running subed-word-data-use-script-file results in a VTT file containing this excerpt:

00:00:00.000 --> 00:00:00.000
it runs well enough for my short videos.

NOTE #+SKIP

00:00:00.000 --> 00:00:00.000
With these subtitles as a base,

NOTE #+SKIP

00:00:00.000 --> 00:00:00.000
I can get... Oops.

00:00:00.000 --> 00:00:00.000
With these subtitles as a base,

NOTE #+TRANSCRIPT: I can get timestamps with subedeline,
#+DISTANCE: 0.14

00:00:00.000 --> 00:00:00.000
I can get timestamps with subed-align
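Writing that kind of file is mostly string formatting. Here's a hypothetical Python sketch that renders captions in the shape of the excerpt above: placeholder zero timestamps for subed-align to fill in, and a NOTE block before any caption that has #+SKIP, #+TRANSCRIPT, or #+DISTANCE annotations. The `Caption` fields and the exact NOTE layout are assumptions, and subed's own output may differ in details. The sample distance 5/37 (five character edits over the 37-character script line) rounds to the 0.14 in the excerpt, but whether subed normalizes that way is also an assumption.

```python
from dataclasses import dataclass
from typing import Optional

PLACEHOLDER = "00:00:00.000 --> 00:00:00.000"  # timing for subed-align to fill in


@dataclass
class Caption:
    text: str
    skip: bool = False
    transcript: Optional[str] = None  # what WhisperX heard, if it differs
    distance: Optional[float] = None


def to_vtt(captions: list[Caption]) -> str:
    blocks = ["WEBVTT"]
    for cap in captions:
        notes = []
        if cap.skip:
            notes.append("#+SKIP")
        if cap.transcript is not None:
            notes.append(f"#+TRANSCRIPT: {cap.transcript}")
        if cap.distance is not None:
            notes.append(f"#+DISTANCE: {cap.distance:.2f}")
        if notes:
            # A WebVTT comment block: "NOTE" followed by text, ended by a blank line.
            blocks.append("NOTE " + "\n".join(notes))
        blocks.append(f"{PLACEHOLDER}\n{cap.text}")
    return "\n\n".join(blocks) + "\n"


caps = [
    Caption("it runs well enough for my short videos."),
    Caption("With these subtitles as a base,", skip=True),
    Caption("I can get... Oops.", skip=True),
    Caption("With these subtitles as a base,"),
    Caption("I can get timestamps with subed-align",
            transcript="I can get timestamps with subedeline,", distance=5 / 37),
]
print(to_vtt(caps))
```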

There are no timestamps yet, but subed-align can add them. Because subed-align uses the Aeneas forced alignment tool, which figures out timestamps by lining up the waveform of speech-synthesized text with the recorded audio, it's important to keep the false starts in the subtitle file so that the text still covers everything in the recording. Once subed-align has filled in the timestamps and I've tweaked them using the waveforms, I can use subed-record to create an audio file that omits the subtitles marked with #+SKIP comments.
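The last step boils down to keeping only the audio under captions without #+SKIP. Here's a hypothetical Python sketch of that idea, not subed-record's actual implementation: `Cue` and `kept_ranges` are illustrative names, and the function merges consecutive kept captions into (start, end) ranges in seconds, treating gaps up to `max_gap` as part of the same range but never bridging across a skipped caption. The times in the example are made up.

```python
from typing import NamedTuple


class Cue(NamedTuple):
    start: float  # seconds
    end: float
    text: str
    skip: bool = False


def kept_ranges(cues: list[Cue], max_gap: float = 0.5) -> list[tuple[float, float]]:
    """Merge runs of non-skipped cues into (start, end) ranges of audio to keep."""
    ranges: list[tuple[float, float]] = []
    prev_kept = False
    for cue in cues:
        if cue.skip:
            prev_kept = False  # never bridge across a skipped false start
            continue
        if prev_kept and cue.start - ranges[-1][1] <= max_gap:
            ranges[-1] = (ranges[-1][0], cue.end)  # extend the current range
        else:
            ranges.append((cue.start, cue.end))
        prev_kept = True
    return ranges


cues = [
    Cue(85.0, 88.2, "it runs well enough for my short videos."),
    Cue(88.2, 89.9, "With these subtitles as a base,", skip=True),
    Cue(89.9, 91.6, "I can get... Oops.", skip=True),
    Cue(92.0, 93.8, "With these subtitles as a base,"),
    Cue(93.9, 96.5, "I can get timestamps with subed-align"),
]
print(kept_ranges(cues))  # [(85.0, 88.2), (92.0, 96.5)]
```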

The code is available as subed-word-data-use-script-file in subed-word-data.el. I haven't released a new version of subed.el yet, but you can get it from the repository.

- Script as a text file exported using the Supernote's handwriting recognition

- VTT produced by WhisperX

- VTT that was automatically split and commented using subed-word-data-use-script-file

In addition to making my editing workflow a little more convenient, I think it might also come in handy for applying the segmentation from tools like sub-seg or lachesis to captions that volunteers might already have edited. (I got sub-seg working on my system, but I haven't figured out lachesis.) If I call subed-word-data-use-script-file with the universal prefix argument (C-u), it should set keep-transcript-words to true, keeping any corrections we've already made to the caption text while still approximately matching and using the other file's segments. Neatly segmented captions might be more pleasant to read and may impose less cognitive load.
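Here's a hypothetical Python sketch of that reuse: walk through the segments from another tool, find each one's closest run of words in the already-edited captions, and emit either those edited words (mirroring what keep-transcript-words is meant to do) or the segment's own text. `resegment` and its `keep_transcript_words` parameter are illustrative names, not subed's API, and the example text is made up.

```python
def levenshtein(a: str, b: str) -> int:
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = curr
    return prev[-1]


def best_prefix(phrase: str, words: list[str]) -> int:
    """How many leading words are closest to phrase by edit distance."""
    best_n, best_d = 0, len(phrase)
    for n in range(1, len(words) + 1):
        d = levenshtein(phrase, " ".join(words[:n]))
        if d < best_d:
            best_n, best_d = n, d
    return best_n


def resegment(segments: list[str], words: list[str],
              keep_transcript_words: bool = True) -> list[str]:
    """Split edited caption words along another file's segment boundaries."""
    out = []
    for seg in segments:
        n = best_prefix(seg, words)
        out.append(" ".join(words[:n]) if keep_transcript_words else seg)
        words = words[n:]
    if words:
        out.append(" ".join(words))  # anything left over becomes one last caption
    return out


# Segments from a segmenter run on the raw transcript (still says "Emax"),
# and the same sentence after a volunteer fixed it.
segments = ["I use the Emax text editor", "to edit my subtitles."]
edited = "I use the Emacs text editor to edit my subtitles.".split()
print(resegment(segments, edited))
print(resegment(segments, edited, keep_transcript_words=False))
```

Keeping the edited words means the volunteer's "Emacs" survives even though the segment boundaries come from text that still says "Emax".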

There's probably some kind of fancy Python project that already does this kind of false start identification and script reconciliation. I just did it in Emacs Lisp because that was handy and because that way, I can make it part of subed. If you know of a more robust or full-featured approach, please let me know!

1 comment

John Bernstein

2025-01-11T18:06:21Z

Fantastic post. I especially appreciated your sketch-note. The visual really helps explain what you're doing.