Improving subed-vtt parsing; using dedicated windows in Emacs; training my intuition

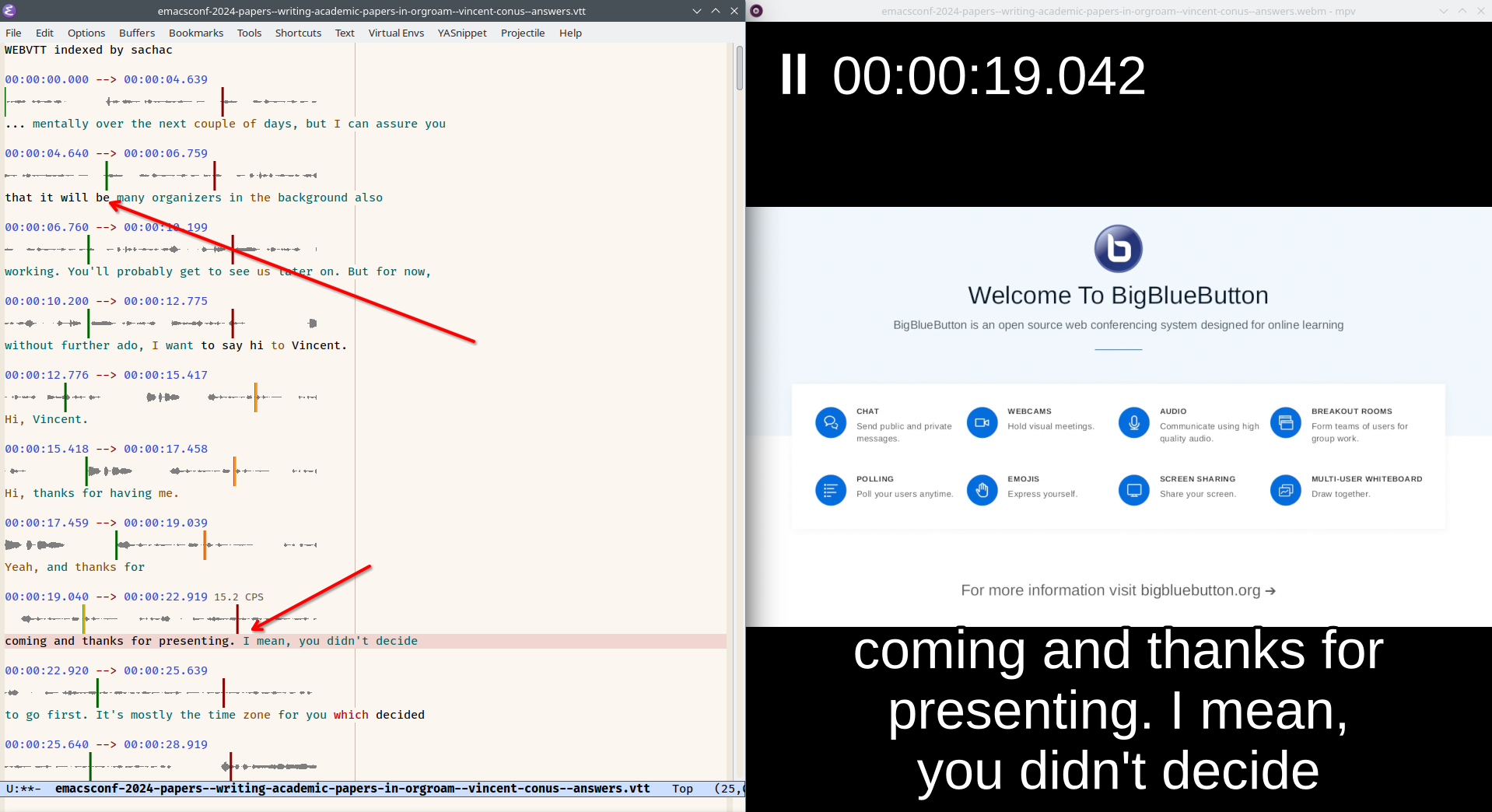

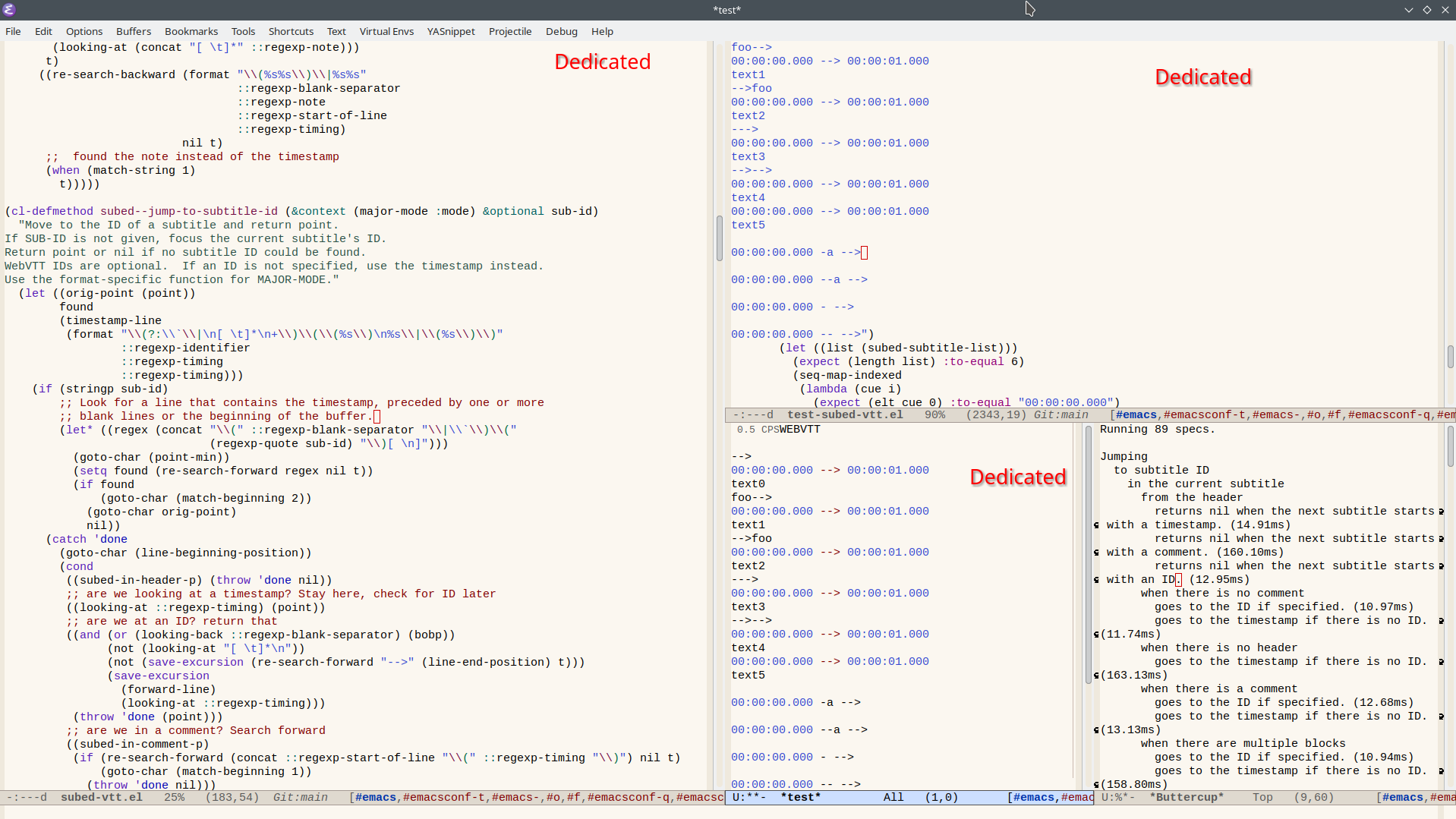

| emacs, subedWhile putting together some notes on how to use subed.el with auto-generated YouTube captions, I decided to get subed-word-data working with the Youtube VTT format. The sample file I downloaded from one of my YouTube videos had a cue whose text started with a blank line, so I ended up redoing the way subed-vtt.el parsed cues. Twice, actually. The first time, I changed it to handle multiple blocks in cue text (separated by blank lines). Then I came across this test suite for WebVTT parsing and found out that the timing line for a cue doesn't have to be preceded by a blank line, so then I needed to change the VTT parsing again.

Fortunately, I inherited a large Buttercup test suite from subed.el's original author. I've been adding to it over the years. As I learned more about how I wanted subed.el to behave, I added more cases. Then I worked on shifting the code to behave the way I wanted it to. At first, I didn't quite understand what I wanted the code to do, but as I pinned down more test cases, I was able to figure it out.

This was the first time I used

toggle-window-dedicated (C-x w d) extensively.

This function keeps the same buffer displayed in

the window instead of letting Emacs Lisp functions

replace it. First, I set up a large window for my

subed-vtt.el. I split the other side into a

smaller window for my test-subed-vtt.el, another

window for a temporary VTT file, and a window for

output from whatever command I'm running. I set

most of the windows to dedicated except for my

temporary output window. I also enabled

winner-mode just in case I messed things up so

that I could restore with winner-undo.

Dedicating the windows meant I didn't have to keep

fussing around with my windows and buffers to get

things back to where I wanted them to be. I did

use C-x o (other-window) a lot, so maybe it'll

be worth getting the hang of ace-window.

Sometimes I wanted to focus on one of those small windows. prot/window-single-toggle was helpful for maximizing a window and then returning to the previous configuration.

I mostly evaluated or edebugged my code and then

used my-buttercup-run-dwim to run a suite in my

test-subed-vtt.el. sp-backward-up-sexp and

sp-narrow-to-sexp were also helpful for

navigating the suites and focusing on whatever I

was working on.

I'm looking forward to exploring the other test cases from that repository. It feels good to get better as a coder.

I just finished reading Mathematica: a Secret World of Imagination and Curiosity, by David Bessis. The idea of training your intuition echoed in my mind as I wrote test cases and changed the code. I started to look forward to the gaps between my understanding of the spec and my understanding of the test cases, and the gaps between the test cases and my implementation. It felt almost like a conversation. Sometimes it was hard to translate an idea into code. I felt myself getting muddled and turned around. Whenever I noticed that, I just had to back up and start from something I understood, figure out what I was uncertain about, and then go from there.

I like the way that subed.el gives me tools for thinking about text, times, and metadata together. That abstraction has been helpful for editing audio and making videos. The more solid I can make it, the easier it will be to imagine other things that use those ideas.