Large language models and me

Categories: ai, geek

Summary: There's a lot of hype around large language models (LLMs) or generative pre-trained transformers (GPTs) like ChatGPT. I'm still trying to figure out how I can use them.

Text from sketch

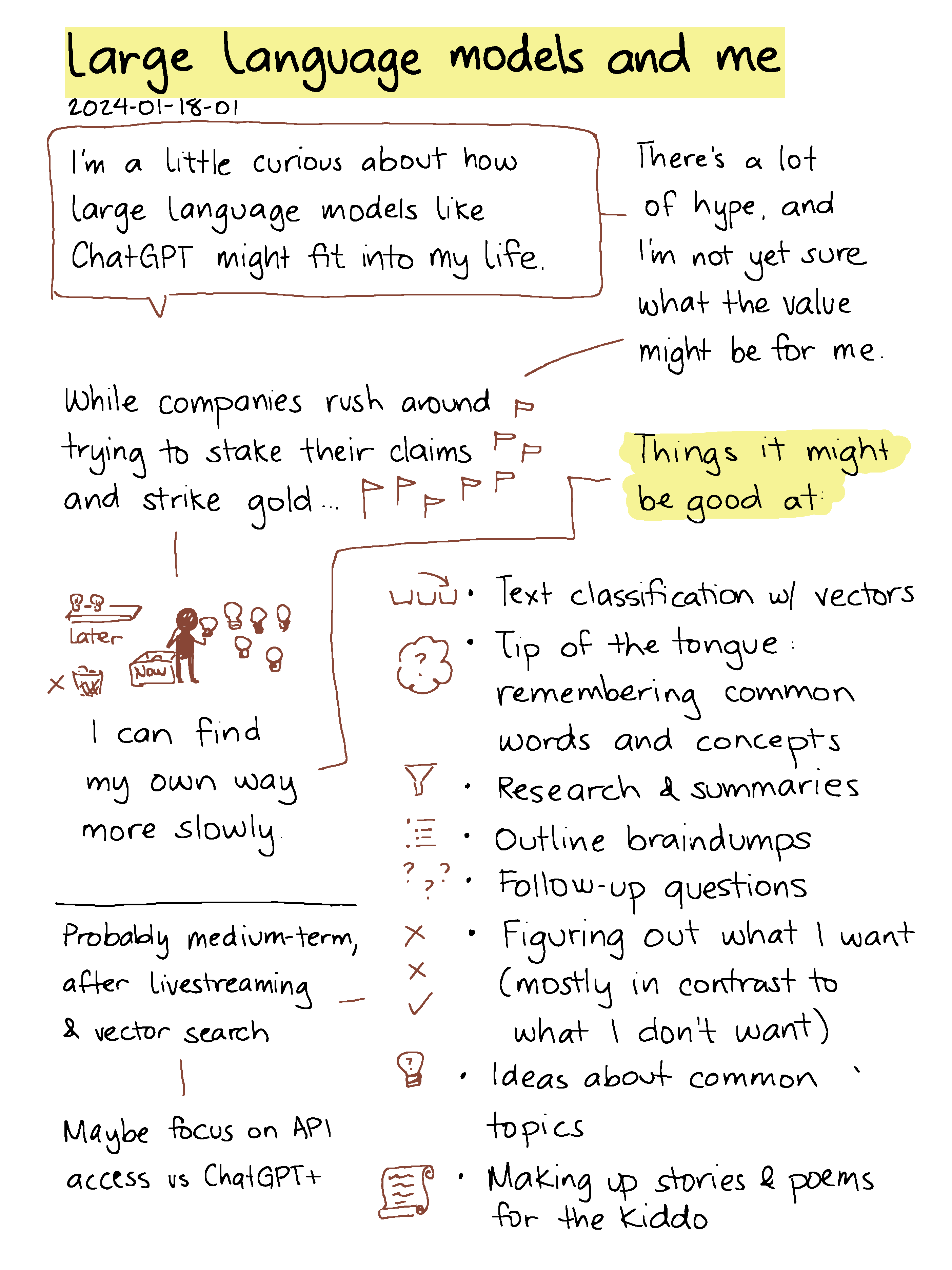

I'm a little curious about how large language models like ChatGPT might fit into my life. There's a lot of hype, and I'm not yet sure what the value might be for me. While companies rush around trying to stake their claims and strike gold, I can find my own way more slowly.

Things it might be good at:

- text classification with vectors

- tip of the tongue: remembering common words and concepts

- research and summaries

- outline braindumps

- follow-up questions

- figuring out what I want (mostly in contrast to what I don't want)

- ideas about common topics

- making up stories and poems for the kiddo

Probably medium-term, after livestreaming and vector search.

Maybe focus on API access versus ChatGPT Plus.

It's a bit of a gold rush in the tech industry at the moment, with all sorts of companies scrambling to figure out generative artificial intelligence and large language models. I feel like I don't quite line up with the personas these companies are building for. I don't want autogenerated marketing fluff for my newsletter or social media posts, and I'm still a little iffy on code. I probably need to go figure things out for myself.

I've built a couple of question-answering prototypes using retrieval-augmented generation with LangChain or LlamaIndex for my consulting clients, and I've started experimenting with using Hugging Face embeddings to do vector search across my blog post and sketchnote titles. I'm also curious about how other Emacs geeks have been experimenting with large language models, such as Abhinav Tushar's EmacsConf 2023 talk on simplifying data visualization with Org Babel and Matplot, Andrew Hyatt's llm package for standardizing interfaces, and the AI category items in my Emacs News.

W- has been experimenting with ChatGPT Plus too. He finds that it's useful for summarizing videos and world news. The sequence of images it generated for a Vim GPT logo was hilariously off-base. Maybe I should mentally file it under entertainment instead of productivity, and anything actually useful can be a bonus.

I think that at the moment, GPTs generally do better when you keep them. Here are some quick notes:

Text classification with vectors

I categorize Emacs News links so that it's easy for people to find the topics they're interested in. Most of the time, a simple regular expression match is enough (ex: Org Mode, Denote, Magit). I can probably make a training data set from recent Emacs News posts so that I can use text classification libraries to suggest a category for links that aren't easy to categorize with regular expressions.
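The regex-first, suggestions-second idea can be sketched in plain Python. This is a minimal sketch under assumptions: the category rules and training titles below are made up, and simple bag-of-words counts stand in for a real embedding model (such as a Hugging Face sentence-embedding model), so it only matches shared words rather than meaning. Anything a regular expression catches is categorized directly; everything else gets a majority vote among the most similar already-categorized titles.

```python
import math
import re
from collections import Counter

# Hypothetical regex rules (category -> pattern); the real Emacs News rules may differ.
RULES = {
    "Org Mode": re.compile(r"\borg\b", re.IGNORECASE),
    "Denote": re.compile(r"\bdenote\b", re.IGNORECASE),
    "Magit": re.compile(r"\bmagit\b", re.IGNORECASE),
}

def vectorize(text):
    """Bag-of-words counts: a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def suggest_category(title, training, k=3):
    """Regex rules first; otherwise a majority vote among the k most similar labeled titles."""
    for category, pattern in RULES.items():
        if pattern.search(title):
            return category
    query = vectorize(title)
    scored = sorted(((cosine(query, vectorize(t)), c) for t, c in training), reverse=True)
    neighbors = [c for score, c in scored[:k] if score > 0]
    return Counter(neighbors).most_common(1)[0][0] if neighbors else None

# Made-up (title, category) pairs, standing in for links from past Emacs News posts.
training = [
    ("Emacs Lisp tips for writing macros", "Emacs Lisp"),
    ("Debugging Emacs Lisp with edebug", "Emacs Lisp"),
    ("A new color theme for dark mode", "Appearance"),
    ("Tweaking the mode line theme", "Appearance"),
]

print(suggest_category("Magit tips", training))                 # caught by a regex rule
print(suggest_category("Writing Emacs Lisp macros", training))  # nearest-neighbor vote
```

Swapping `vectorize` for real embeddings would keep the same structure; the vote over nearest neighbors is just one simple way to turn similarity scores into a suggestion.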

Tip of the tongue

I think GPT is useful for figuring out the words to look for.

One of the challenges of learning more is figuring out the jargon, like learning how to use M-x apropos to look for things related to buffers. (Apropos, buffers; Emacs uses lots of weird words.) Even when you know the words, it can be hard to remember them: tip of the tongue is a thing, and it'll probably get harder as I get older. (Another good reason to exercise.)

It'll be handy if I can just vaguely describe something and then get the words to look for, or use vector search to find things based on similarity rather than exact matches.
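A rough sketch of similarity search over titles, assuming a small made-up list of titles. Character trigrams (three-letter chunks) stand in for embeddings here, so "buffers" still matches "buffer"; real embeddings would also catch synonyms and related concepts, which trigrams cannot.

```python
import math
from collections import Counter

def trigrams(text):
    """Character trigrams of the lowercased text, padded so word edges count too."""
    padded = f"  {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))

def cosine(a, b):
    """Cosine similarity between two trigram count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def search(query, titles, top_n=3):
    """Rank titles by similarity to a vague description instead of requiring exact matches."""
    q = trigrams(query)
    return sorted(titles, key=lambda t: cosine(q, trigrams(t)), reverse=True)[:top_n]

# Hypothetical titles, for illustration only.
titles = [
    "Using M-x apropos to find buffer commands",
    "Making sketchnotes on an iPad",
    "Livestreaming with OBS",
]
print(search("how do I look for commands about buffers", titles, top_n=1))
```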

Research and summarization

I appreciate that the web access in ChatGPT-4 and Microsoft Bing/Copilot allows me to get search results back without having to deal with all the generic SEO content, newsletter popups, and content paywalls that plague the modern web. Sometimes it will even analyze the information and put things into tables or outlines for me. Of course, it is also contributing to the problem by making it easier for people to churn out lots of generic SEO content or newsletter updates, so this feels somewhat self-defeating in the long run. We'll just have to see how it all works out.

I'm also curious about using it to summarize the videos I come across for Emacs News, although people usually set good titles, and a quick skim of the transcript often helps.

Outlines

I'm ambivalent about generating outlines for blog post ideas. The ones it comes up with from short prompts are pretty generic and intro-focused, and it feels easier to just start with my own outline.

Sample outline for a blog post idea

I. Introduction

- Personal Reflections
  - Express curiosity about the potential of large language models in daily life.
  - Discuss the hype around these models versus personal exploration.
- Setting the Stage
  - Briefly mention the rise of companies in this domain and public interest.

II. Understanding Large Language Models

- What are Large Language Models?
  - Definition and basic explanation.
  - Brief history and evolution.
- ChatGPT: A Case Study
  - Introduction to ChatGPT.
  - How it differs from traditional models.

III. Practical Applications of ChatGPT

- Everyday Uses
  - Text classification with vectors.
  - Memory assistance: Remembering common words and concepts.
  - Research and summaries.
- Creative Endeavors
  - Generating stories and poems.
  - Using ChatGPT for brainstorming and idea generation.
- Professional Scenarios
  - Outlining and organizing thoughts.
  - Follow-up questions and deep-dives into topics.
  - Contrasting desires and needs: Figuring out preferences.

IV. Exploring Advanced Features and Accessibility

- Beyond Basic Use: Advanced Features
  - Exploring vector search.
  - Potential of ChatGPT in livestreaming contexts.
- Accessibility and Options for Users
  - Comparing API access with ChatGPT Plus.
  - Discussing the implications for different types of users.

V. Conclusion

- Personal Reflections Revisited
  - Revisiting initial skepticism or curiosity.
  - Reflections based on the exploration in the article.
- Looking Forward
  - Potential future developments in large language models.
  - Encouraging reader interaction: Inviting questions and comments.

The outlines it generates from my audio braindumps could be an interesting way to structure a large wall of text, if I could just get it to use more of my own words while still correcting misrecognized words.

Follow-up questions and ideas

I could give GPT a blog post or presentation draft and ask it to generate follow-up questions so that I can flesh ideas out further. Then again, adding more stuff usually isn't a problem for me; it's more about finding a good stopping point instead of getting tempted to squeeze one more hack in…

The kiddo sometimes needs a bit of prompting to elaborate on her writing homework. She responds well to me mind-mapping while we free-associate words, but sometimes it helps to have follow-up questions, and it works out much better when the computer's the one asking the follow-up questions instead of me.
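One way to set this up through an API is to wrap the draft in a request that asks only for questions. The sketch below just builds a request body in the messages format used by OpenAI's chat completions API; it doesn't send anything. The function name, prompt wording, and default model name (borrowed from the model mentioned later in this post) are assumptions, and actually sending it would need an API key and an HTTP client.

```python
import json

def build_follow_up_request(draft, n_questions=5, for_kid=False, model="gpt-4-1106-preview"):
    """Build (but do not send) a chat-completions-style request asking for follow-up questions."""
    if for_kid:
        audience = "a child working on a writing assignment; keep each question short and simple"
    else:
        audience = "a writer who wants to flesh out a blog post or presentation draft"
    system = (
        f"You help {audience}. Reply with exactly {n_questions} numbered follow-up questions "
        "about the text. Do not rewrite or summarize it."
    )
    return {
        "model": model,  # assumption: swap in whichever chat model you have access to
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": draft},
        ],
    }

request = build_follow_up_request("My cat wakes me up at 5:30 AM.", n_questions=3, for_kid=True)
print(json.dumps(request, indent=2))
```

Telling it not to rewrite the text keeps the computer in the question-asking role, so the writing stays hers (or mine).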

Figuring out what I want to say and how

Sometimes I'm not happy with the way a sentence or paragraph flows. Metaphors can be helpful. Mixed metaphors are weird. Maybe a GPT can help me find a smoother way to explain things. Getting writing suggestions seems to be fine on a very small scale (phrases or sentences).

When I ask GPT to write longer pieces, things get crazy. ChatGPT is pretty good at getting me to say "That totally doesn't sound like me, I'd better just write it myself," which seems to be a common reaction.

AI style guide

I wanted to see if I could get it to sound more like me, so I went through Tiago Forte's tutorial for creating an AI style guide. I gave it a few of my recent blog posts (1, 2, 3, and 4) and asked it to summarize the resulting style guide concisely. I've included the details in case you want to get an idea of the kinds of things it makes, but it seems more suited to flattery than to practical application.

Sample analysis for one blog post

Voice and Tone

- Formality: The language is informal and conversational, combining personal anecdotes with a discussion of workflows and projects.

- Authoritative vs. Friendly: The tone is friendly and reflective, sharing personal experiences and thoughts in an approachable manner.

- Overall Tone: There is a sense of openness and exploration, with a focus on sharing personal learning and development processes.

Mood

- The mood is contemplative and optimistic, reflecting a mindset of constant learning and adaptation.

Sentence Structure

- Structure Type: A mix of compound and complex sentences, with a strong narrative flow that interweaves personal insights with technical descriptions.

- Length: The sentences vary in length, balancing brief statements with more elaborate explanations.

Transition Style

- Transitions are smooth and natural, often moving from a general idea to specific examples or from one aspect of a project to another.

- The writer uses a blend of direct transitions and narrative progression to guide the reader through different ideas and concepts.

Rhythm and Pacing

- The rhythm is consistent and engaging, with a good balance between detailed technical information and personal narrative.

- The pacing is moderate, allowing the reader to absorb the information without feeling rushed.

Signature Styles

- Recurring Phrases: Frequent use of terms related to personal productivity, technology tools, and creative processes.

- Unique Punctuation: Inclusion of lists and occasional asides to provide additional context or to elaborate on a point.

- Patterns/Repetitions: Regular mention of personal routines and habits, combined with technical tool usage and idea development.

Distinctive Writing Style Indicators

- A strong focus on personal productivity and idea management, combined with technical tool usage.

- Frequent reflection on personal learning processes and adaptation strategies.

- An engaging narrative style that seamlessly combines personal experiences with technical advice and insights.

- The inclusion of potential future improvements and explorations, indicating a forward-thinking and open-ended approach to problem-solving.

In summary, this text further cements the author's distinctive style of blending personal narrative with technical content. The writing is reflective, engaging, and informative, offering insights into personal workflows and idea management. The author's approachable tone and focus on continuous learning and adaptation make complex topics accessible and relatable to the reader.

Expanded AI style guide combining the guides from several posts

Voice and Tone

- Formality: Adopt a semi-formal language, leaning towards informal. Use a conversational style but include technical jargon where appropriate.

- Authoritative vs. Friendly: Aim for a friendly, informative tone. The writing should guide and assist, rather than command.

- Overall Tone: Maintain a helpful and instructive tone, with a focus on practical application and reader engagement.

Mood

- Keep the mood pragmatic and enthusiastic, especially when discussing the efficiency and utility of tools, methods, and personal projects.

Sentence Structure

- Structure Type: Utilize a mix of simple, compound, and complex sentences.

- Length: Vary sentence lengths. Use brief sentences for straightforward facts and longer ones for detailed technical explanations and personal anecdotes.

Transition Style

- Ensure transitions are smooth, moving from general ideas to specific examples. Use both direct transitions and subtle shifts in topic, based on the subject matter.

Rhythm and Pacing

- Maintain a moderately paced rhythm. Balance shorter sentences for clear facts and longer ones for in-depth explanations.

- Keep the narrative conversational and engaging, with a balance between technical details and personal reflections.

Signature Styles

- Recurring Phrases: Frequently use phrases like "Org Mode," "Emacs," and relevant technical terms.

- Unique Punctuation: Use parentheses for additional information and include code snippets where relevant.

- Patterns/Repetitions: Regularly follow the introduction of a concept with practical, often technical, examples.

Distinctive Writing Style Indicators

- Blend personal experience with technical instruction.

- Use specific examples, particularly code snippets, to illustrate points.

- Combine technical jargon with a conversational tone.

- Include practical applications and benefits of described methods or tools.

- Integrate personal anecdotes and reflections with technical content.

- Use interactive elements like reader questions and responses to foster a community-focused approach.

Additional Elements

- Focus on problem-solving and exploring new tools or methods.

- Include visual elements like screencasts or diagrams to enhance understanding.

- Reflect on personal learning processes and adaptation strategies.

- Consider potential future improvements and explorations in the subject matter.

When I asked it to summarize the style in a single paragraph, it said:

Use semi-formal, conversational language with occasional technical jargon. The tone should be friendly and informative, focusing on practical applications and engaging the reader. Include a mix of sentence structures, varying lengths for clarity and detail. Transition smoothly between general ideas and specific examples, maintaining a moderate pace with a balance of technical details and personal reflections. Frequently use phrases like 'Org Mode' and 'Emacs', and incorporate parentheses for additional information and code snippets to illustrate points. Blend personal experiences with technical instruction, using specific examples, especially code, to clarify concepts. The content should emphasize problem-solving, exploring new tools/methods, and personal learning processes, with visual elements like diagrams for better understanding. Aim to reflect on potential future improvements in the subject matter, fostering a community-focused approach with interactive elements like reader questions.

This feels related to the Barnum effect, the psychological phenomenon in which people rate very general statements (ex: horoscopes) as specific to them. I'm not sure I could give this style guide to a human and get them to write enough like me that I'd want to use the text.

I've had a few opportunities to reflect on what does sound like me, mostly thanks to EmacsConf. For example, I definitely skew the EmacsConf e-mails away from polished and toward personal. (Do other conference organizers include "aaaah!" and stress levels in their e-mails?) I tell GPT to get rid of its adjectives. Even with this style guide and additional instructions like avoiding adjectives, I haven't gotten ChatGPT to cut down on this thing I can't quite figure out how to explain. The way it writes just feels like a bit much.

Generating text

Here's what it came up with when I asked it to use the style guide and write one paragraph about how the cat's been waking me up at 5:30 AM, the kiddo wants bedtime snuggles at 11:30 PM, and I can tell when the sleep deprivation's building up thanks to the brain hiccups I get when solving a Rubik's cube. It said:

Ah, the joys (and challenges) of balancing family life with personal interests and hobbies! Let me tell you, as someone who's navigated the tricky waters of being woken up by a cat at the ungodly hour of 5:30 AM and then, barely blinking away the sleep, being summoned for late-night snuggle sessions with the kiddo until 11:30 PM, I totally get the feeling of being perpetually sleep-deprived. This exhaustion hits hardest when I'm trying to solve Rubik's cubes. Normally, I'd zip through the algorithms with ease, but now, I find my brain hiccupping mid-solve, forcing me to backtrack and re-solve. It's like my mind is stuck in a loop!

Which… well… It's reassuring to know that even though I sometimes feel self-conscious about enthusiasm! exclamation marks! geeking out about stuff! I actually write in a more restrained manner compared to how ChatGPT thinks I write.

Also, ChatGPT enthusiastically went on to write two paragraphs making up more stuff out of whole cloth, which I will tuck behind a collapsible section because this is definitely more along the lines of entertainment rather than productivity at this time.

More from ChatGPT

But, you know, this is where my geek side comes to the rescue. I've started using Org Mode in Emacs (my go-to productivity tool) to manage my day better. How? Well, for starters, I've programmed a custom routine that reminds me to take short, but effective power naps. Think (setq power-nap '(15 "minutes")). This tiny snippet of code, embedded in my daily schedule, works wonders! Plus, Org Mode's flexibility allows me to seamlessly integrate family time into my daily planner, ensuring I don't miss out on those precious moments. And when it comes to problem-solving, I've begun to treat my Rubik's cube solves like mini projects. I break them down into steps and track my progress (and missteps) in Emacs. This not only helps me understand where my sleep-deprived brain is tripping up but also serves as a great way to visualize my thought process (hello, mind maps!).

Looking ahead, I'm considering exploring Emacs extensions that could further optimize my routine, maybe even one that predicts the best times for cube-solving based on my sleep patterns. It's all about finding that sweet spot where personal hobbies and family life can coexist without leaving me feeling like a zombie. Who knows, maybe I'll even code a mini-game in Emacs for my kiddo, blending playtime with my love for coding. The possibilities are endless, and that's the beauty of it. Any fellow Emacs users out there with similar challenges? How do you balance it all? Let's share some tips and tricks!

So yeah. Maybe not text generation just yet.

Ideas about common topics

My energy can get pretty low during the afternoon because of the aforementioned 5:30 cat alarm. It can help to have something suggest a list of ideas that we can pick from, like little Minecraft build challenges.

Making up stories and poems for the kiddo

It's a handy way to generate short stories and poems based on the kiddo's interests. I've been getting her to supply a prompt in either the SWBST story summary format ("Somebody… Wanted… But… So… Then") or the beginning/middle/end format so she does some of the work, and then GPT can deliver the payoff in the form of a fleshed-out story that invariably makes us giggle.
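A small sketch of turning the kiddo's SWBST pieces into a prompt. The class name, prompt wording, word limit, and sample story are all made up for illustration; the idea is that she fills in the five slots and the template does the rest.

```python
from dataclasses import dataclass

@dataclass
class StoryPrompt:
    """A kid-supplied SWBST outline: Somebody, Wanted, But, So, Then."""
    somebody: str
    wanted: str
    but: str
    so: str
    then: str

    def to_prompt(self, max_words=300):
        """Turn the outline into one prompt string to paste into (or send to) a chat model."""
        return "\n".join([
            f"Write a funny story for a child, at most {max_words} words, following this outline.",
            f"Somebody: {self.somebody}",
            f"Wanted: {self.wanted}",
            f"But: {self.but}",
            f"So: {self.so}",
            f"Then: {self.then}",
        ])

# Hypothetical example outline.
story = StoryPrompt(
    somebody="a sleepy cat",
    wanted="breakfast at 5:30 AM",
    but="everyone was still asleep",
    so="it knocked things off the shelf",
    then="the whole family made pancakes",
)
print(story.to_prompt())
```

A beginning/middle/end variant would work the same way with three fields instead of five.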

Wrapping up

This is more of a low-priority medium-term thing for me to explore, probably after I spend some more time getting the hang of livestreaming, transcripts, and vector search. I'll keep an eye out for interesting ideas.

I think I can access the GPT-4 model (or maybe gpt-4-1106-preview) on a pay-per-use basis through the API, which will probably work out better than paying USD 20/month for ChatGPT Plus. I can also check out Gemini Pro's API.
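The pay-per-use versus subscription comparison is simple arithmetic. The token counts, request volume, and per-1,000-token prices below are placeholders (not quoted from any pricing page), so plug in current prices before trusting the result. With those placeholders, each request costs about three cents, so the USD 20 subscription only wins past roughly 666 requests a month. This ignores the extras bundled with ChatGPT Plus, like the web access and image generation mentioned above.

```python
import math

def cost_per_request(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k):
    """USD cost of one API call, given prices per 1,000 tokens."""
    return (input_tokens / 1000) * input_price_per_1k + (output_tokens / 1000) * output_price_per_1k

def break_even_requests(subscription_usd, per_request_usd):
    """Whole number of requests per month that fit under the flat subscription price."""
    return math.floor(subscription_usd / per_request_usd)

# Placeholder prices: assumptions for illustration, not real quotes.
INPUT_PRICE_PER_1K = 0.01
OUTPUT_PRICE_PER_1K = 0.03
SUBSCRIPTION_USD = 20  # the ChatGPT Plus price mentioned in the post

per_request = cost_per_request(1500, 500, INPUT_PRICE_PER_1K, OUTPUT_PRICE_PER_1K)
monthly = 200 * per_request  # assumed usage: 200 requests a month
print(f"~${per_request:.3f} per request, ~${monthly:.2f} for 200 requests/month")
print(f"Break-even: about {break_even_requests(SUBSCRIPTION_USD, per_request)} requests/month")
```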

A cursory skim of r/LocalLLaMA gives me the impression that for reasonable non-toy use, local large language models require way better hardware than I have at the moment. Even people with more powerful hardware tend to focus on smaller models (maybe for coding autosuggestions or generating text for roleplaying games), and they use commercial GPTs for things where they want larger context windows or more general knowledge. Might be worth checking on this in a little while as people work on scaling models down and figuring out more things to do with open source models.
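A back-of-the-envelope calculation shows why hardware is the bottleneck: just holding a model's weights takes roughly (number of parameters × bits per parameter ÷ 8) bytes. For a 7-billion-parameter model, that's about 13 GiB at 16 bits, or about 3.3 GiB with 4-bit quantization. This sketch ignores the KV cache, activations, and runtime overhead, so real requirements are higher; the 7B, 13B, and 70B sizes are just common open-model sizes used as examples.

```python
def weight_memory_gib(params_billion, bits_per_param):
    """Rough memory needed just to hold the weights, in GiB (no KV cache or runtime overhead)."""
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 2**30

for size in (7, 13, 70):
    row = ", ".join(f"{bits}-bit: {weight_memory_gib(size, bits):5.1f} GiB" for bits in (16, 8, 4))
    print(f"{size:>2}B params -> {row}")
```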

Anyway, those are my notes on this so far. Looking forward to reading more about how people are using LLMs in Emacs, considering all the things that Emacs can work with!

1 comment

Nagora

2024-01-23T15:07:39Z

My experience with GPT was initially quite positive, but I've stopped using it for a couple of reasons.

The first is that I realised that they use quite a clever UI trick to make it feel more impressive. By having the answer come up as if it were being typed, it feels more like it's thinking rather than picking random words that are associated with the ones already in the message (which is what it's actually doing). Psychologically, I found that this made me much more accepting of its mistakes than when I looked away and then read the completed results. By and large I would say that GPT-3 is about 80% accurate in the sense of producing a usable result that isn't a pack of lies. I wouldn't accept that from a human employee.

The second reason was hinted at above. Once I understood what was actually going on under the hood, I could see that an LLM will never produce new ideas except by accident. Each word is a statistical guess, not a considered thought. Some word combinations might spark a new idea in me, much like Bowie/Burroughs' "cut-up" technique, but the further I go in my questions from areas which have large sample sizes in its database, the less likely it is to come up with anything useful.

At that point it is just a search engine of other people's work, but with the added flaw that it will simply make stuff up from nowhere. It specifically recommended a pair of named NFS utilities to me to solve a problem and neither of them exist.

GPT-3 is for my purposes useless. I suspect that is the case for any such system which is trained on a very large and unfocused data set. But, more than that, I think that any system trained on a focused dataset will in fact end up being nothing more than a good search engine. The tighter the box, the less likely it is to think outside the box; the bigger the box, the more likely it is to put together things that don't belong.

Your examples of its output trying to imitate you are really terrible! It's just junk guesswork based on it sort of classifying you as a stereotypical enthusiastic computer geek with "Emacs" dropped in here or there. If you had posted any of that text I would have thought you were unwell or, more likely, that your account had been hacked and a bot had written it.