Letting A+ play with generative artificial intelligence for fiction, and a primer

After experimenting with ChatGPT in depth, A+ has decided that she prefers Claude, so we'll try that.

A+ likes prompting Claude AI to generate funny kid-focused Star Wars fanfic, mostly exploring how various characters might babysit Luke, Leia, and Grogu in a universe where Anakin did not fall to the Dark Side. This seems to be working out well. It encourages her to improvise stories even when we're off-screen, and it lets her play with small variations on ideas and quickly try things out. The stories that Claude generates are humorous and descriptive, with better dialogue and characterization than I might come up with myself. This is infinitely better than letting her wander around fanfiction.net on her own.

She often runs into the free tier's usage limits. There are plenty of other things she enjoys doing, so it's not a problem. She just goes on to read other things, practise singing, or colour. Still, we've been noodling around with the idea of paying for a subscription, especially if we can help her get even more out of it. For example, maybe we could build some routines like:

- Daily: Tell me your favourite story of the day. What did you like about it? What was the problem and what was the solution?

- Weekly:

  - Check in to see if you're all caught up with schoolwork

  - Pick a story and let's draw a quick scene from it together; maybe I can compile it into a little PDF we can enjoy

- Once a month:

  - Record one story out loud (~ 250 words, so a couple of minutes)

(I know, I know, it's pretty aspirational. But maybe I can sneak some of it in here and there, especially if I start doing it myself. Then she usually wants to join in.)

Prompt engineering

A+ currently writes prompts like:

- Write a funny story where Darth Vader has a bunch of body doubles (like Queen Amidala did) and they have a very important meeting. The Emperor is trying to find Darth Vader and keeps finding the body doubles instead.

- Write a silly story where Anakin raises Luke and Leia with Padme instead of turning to the dark side.

- Write a continuation of The Skywalker Family Chronicles where Anakin is helping Luke and Leia get dressed for a very important Bring Your Children to a Council meeting.

- Write a funny continuation of The Skywalker Family Chronicles about the time that Captain Rex brought the rest of the 501 Legion and Commander Cody's Legion to help babysit Luke and Leia.

- Write a continuation of The Skywalker Family Chronicles where Commander Cody has to babysit Luke and Leia because Captain Rex is dealing with leftover Separatist droids and has to call in reinforcements who run into the Skywalker Naboo Lake House, fully armed, and wearing their full armor, asking whether his problem is either rouge Separatists droids or the Skywalker children and bringing two bags of military equipment and two bags of Skywalker Specific Stress Depleters and other things for babysitting.

- Write a continuation of The Skywalker Family Chronicles where Grogu reads his journal, flipping to the page where Padme takes Ahsoka, Anakin, Yoda, Grogu, still talking and potty training, Luke, and Leia to a boat ride, but it turns out Yoda, Anakin, Luke, Ahsoka, and Leia get seasick, told from Grogu's point of view in detail.

  - It was interesting to see this prompt evolve over 16 iterations starting from "Write a continuation of The Skywalker Family Chronicles where Padme takes Anakin, Luke, and Leia to a boat ride, but it turns out that boat rides become disasters for Skywalkers."

- Write a continuation of The Skywalker Family Chronicles where Ahsoka reads her journal, ending up on the page where she ends up at the Senate Medical Center due to some flu that only Togrutas can catch, the journal written in slightly squiggly handwriting, which is either Grogu or Anakin's handwriting.

The story Claude generated for that prompt

Ahsoka's Journal — Entry #47

[The handwriting is notably messy and inconsistent, with some letters larger than others and lines that don't quite stay straight across the page]

~~Dear Snips Journal~~ ~~Ahsoka's Top Secret Thoughts~~ Today's Very Important Record of Events

Anakin here. Ahsoka is sick in bed with Togrutan Spotted Fever (which is apparently a thing??), so I'm writing this for her because she made me promise to "keep her journal updated" and threatened to tell Padmé about the speeder bike incident if I didn't. So here we are.

Medical droid says she'll be fine in a few days but needs rest. She keeps trying to get up to train, so I'm taking dictation between making her stay in bed. Her exact words: "Make sure you get everything right, Master, or I'll know."

~~This is ridiculous~~ Here's what happened:

It started three days ago when Ahsoka's lekku developed these weird purple spots. She insisted it was "just a cosmetic phase all Togruta go through" and refused to see a healer. Then she collapsed during lightsaber practice, which was TERRIFYING, by the way. Not that I panicked. I was very calm and collected as I carried her across the Senate District while shouting for people to move out of the way.

The twins kept trying to "help" by Force-pushing pedestrians, which didn't actually help AT ALL. Leia claims "that man was going to step on Ahsoka's lekku" but I'm pretty sure she just wanted to see if she could push someone into a fountain. (She could.)

Luke tried to use the Force to make Ahsoka float "so you don't have to carry her, Dad" but only managed to make her boots come off. One hit Senator Organa right in the back of the head. I'll have to apologize for that later.

When we got to the Senate Medical Center, the droid at reception just stared at us like we were crazy. In my defense, it's not every day a former Jedi Knight runs in carrying an unconscious Togruta while two Force-sensitive five-year-olds make medical equipment float around the waiting room.

Padmé arrived looking perfect as always, somehow already briefed on the situation. She claims she "felt a disturbance in the Force, and that disturbance was you causing chaos, Anakin." She's not even Force-sensitive! How does she KNOW these things??

The medical droid diagnosed Ahsoka with "Togrutan Spotted Fever" which apparently only Togruta can catch. The spots spread all over her lekku and montrals before the fever kicked in. The droid said it's like "human chicken pox but confined to head-tails" which is both fascinating and disgusting.

Grogu seemed very interested in the spots, kept trying to touch them until Ahsoka made that scary growling noise. Now he brings her soup instead. At least I think it's soup. It's green and bubbling and I'm a little afraid to ask what's in it, but Ahsoka seems to like it.

Obi-Wan stopped by with "medicinal tea" which smelled terrible but made Ahsoka laugh so hard she snorted bacta solution out her nose. Something about a mission where I apparently got similar spots in an inappropriate location? I DON'T REMEMBER THIS AND IT NEVER HAPPENED.

Oh, Ahsoka wants me to add:

"Master Yoda visited and said 'Strong with the antibodies, you must be.' He brought me a weird root that tasted awful but made my headache go away. Also, tell the younglings the spots are NOT for coloring practice!"

Speaking of which, I caught Luke trying to "connect the dots" on Ahsoka's lekku with a marker while she was sleeping. Leia was standing lookout. I'm simultaneously proud of their teamwork and horrified by their choices.

Padmé has now banned everyone except medical droids from the sick room after Ahsoka's fever spiked when Rex brought the entire 501st to "boost morale." Apparently twenty clone troopers singing get-well songs isn't restful. Who knew?

Ahsoka is falling asleep now, but insists I note that "Anakin's nursin g skills are terri bl…"

She didn't finish that sentence because she's WRONG and also finally sleeping. I am an EXCELLENT caretaker! I've only spilled bacta solution twice, and the second time wasn't even my fault because R2 bumped into me.

I should wrap this up. Padmé says my handwriting is getting worse, which I didn't think was possible. Also, Grogu is trying to add his own notes in the margins and I can't tell if those are drawings or actual words.

Get better soon, Snips. The twins miss their "Auntie 'Soka" and I miss having someone else to blame when things go wrong.

— Anakin

[In the corner, there appears to be a small green handprint with what might be Grogu's attempt at writing "Get better" beside it]

All right, let's get a bit meta here. We're out of free messages for Claude, so I asked ChatGPT: "Here are some prompts that my 9-year-old child has been using to generate funny Star Wars fanfiction in alternate timelines. Suggest 5 ideas for improving her prompts and provide detailed examples."

It suggested:

- Be more specific about the chaos or conflict.

- Add unexpected reactions from characters.

- Use a "What If?" format to spark creativity.

- Introduce a clear perspective or narrator.

- Add an absurd or over-the-top element.

Full response including examples and details

Your child already has a fantastic imagination and a great sense of humor! Here are five general tips to help improve and refine her prompts for even funnier and more engaging Star Wars fanfiction:

1. Be More Specific About the Chaos or Conflict

- Funny stories often work best when there's a strong contrast or a big misunderstanding. Adding more details about what could go wrong will make the story even funnier.

- Example: Instead of: "Write a funny story where Darth Vader has a bunch of body doubles, and the Emperor keeps finding them instead." Try: "Write a story where Darth Vader has a dozen body doubles, but they're all terrible actors who keep revealing they're fakes in ridiculous ways. The Emperor gets increasingly frustrated as he accidentally promotes one to Grand Moff, challenges another to a lightsaber duel, and gets tricked by one into approving a Death Star Karaoke Night."

2. Add Unexpected Reactions From Characters

- One of the funniest things in fanfiction is when characters react in ways they normally wouldn't. Encourage your child to play with unexpected character reactions.

- Example: Instead of: "Write a silly story where Anakin raises Luke and Leia with Padmé instead of turning to the dark side." Try: "Write a story where Anakin raises Luke and Leia with Padmé, but he's the most overprotective parent in the galaxy. He installs lightsaber-proof baby gates, interrogates their playdates like a Jedi Council trial, and insists on Force-training them before they can walk. Meanwhile, Obi-Wan just shakes his head and drinks space tea."

3. Use a "What If?" Format to Spark Creativity

- Reframing prompts as "What if?" questions can help make them more engaging and open-ended.

- Example: Instead of: "Write a funny continuation where Captain Rex and the clones babysit Luke and Leia." Try: "What if the entire 501st Legion had to babysit Luke and Leia for a day, but they treated it like a high-stakes military mission? Their strategy meetings include creating 'Operation Diaper Storm,' using clone trooper formations for nap time, and having emergency evacuations when Leia gets hold of a blaster."

4. Introduce a Clear Perspective or Narrator

- Some of the best humor comes from seeing an event through the eyes of a character with a strong personality or a unique way of looking at things.

- Example: Instead of: "Write a story where Grogu reads his journal about a boat ride." Try: "Write a story from Grogu's journal where he describes (in broken baby Yoda language) a disastrous boat ride. Yoda, Anakin, Luke, and Leia all get seasick, Ahsoka tries to 'train' the fish, and Padmé regrets everything. Meanwhile, Grogu just wants snacks and writes about it like it's a Jedi prophecy."

5. Add an Absurd or Over-the-Top Element

- Taking an idea to the extreme can make it even funnier.

- Example: Instead of: "Write a story where Commander Cody has to babysit Luke and Leia." Try: "Write a story where Commander Cody has to babysit Luke and Leia, but he's so stressed that he calls in every trooper he knows for backup. Within an hour, the Skywalker Lake House is surrounded by AT-ATs, aerial support is patrolling for 'nap-time threats,' and Palpatine accidentally approves a new 'Elite Babysitting Clone Squad' after mistaking their report for a battle plan."

Encourage her to keep going with her hilarious and creative ideas! These small adjustments can make the prompts even more fun and engaging. 🚀

We read through this yesterday, and she's started telling me how she's added more detail to her prompts. I think she takes constructive feedback from the AI better than from me (just like using a timer works better than me telling her it's time to wrap up), so maybe there's an opportunity here to use a layer of indirection to keep the grumps from getting in the way of learning…

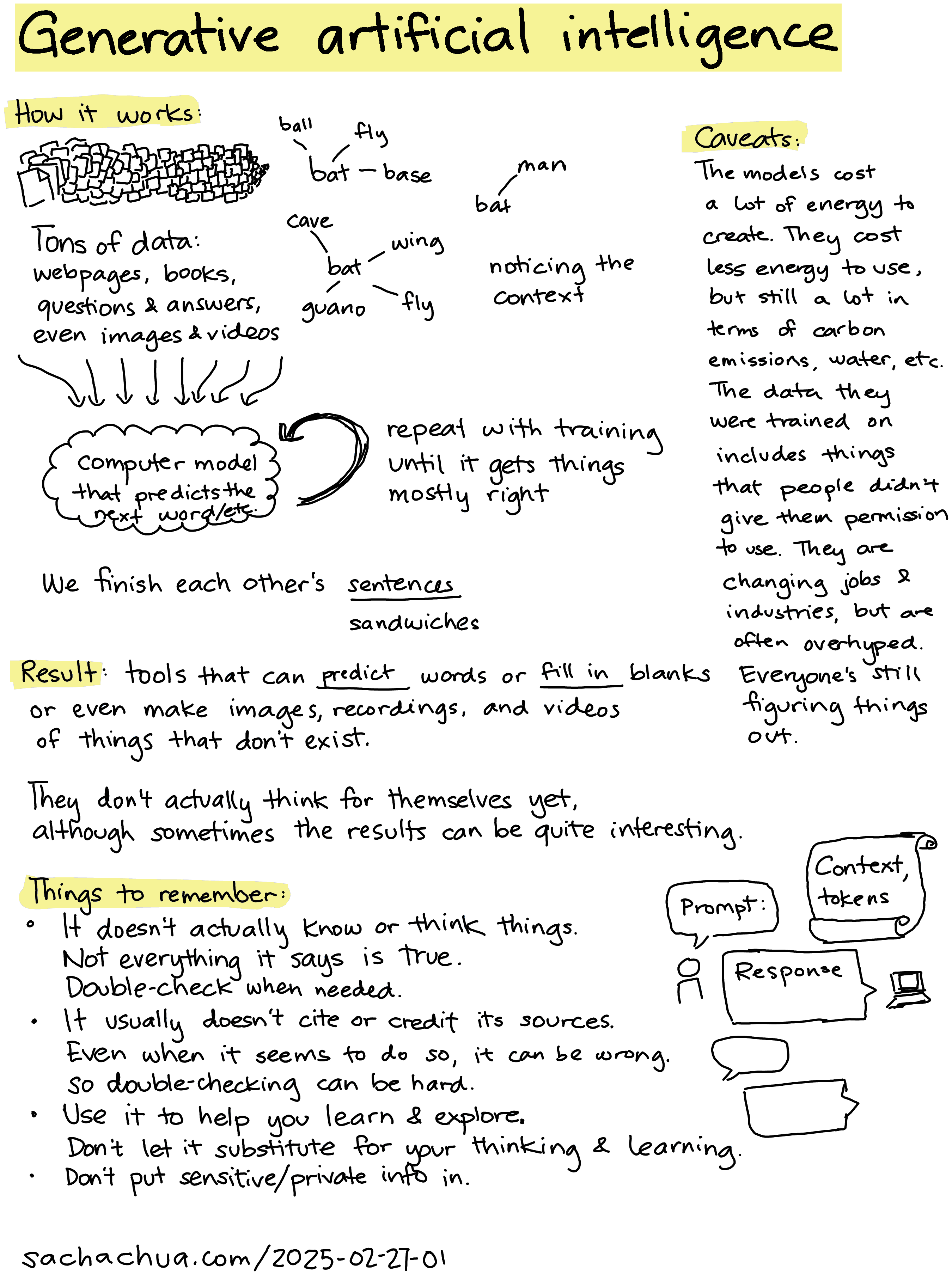

A primer on generative AI

I don't want A+ to treat generative AI as some kind of magic black box with amazing capabilities, so I've been sprinkling in some primer information here and there. I want her to understand roughly how it works, how it's not actually thinking but can still do a surprisingly good job of putting things together based on patterns derived from really large data sets in ways that are hard for humans to fully understand.

I also explained how conversations and token limits work. She's already getting the hang of editing prompts to refine things instead of adding more prompts to the conversation, although she still prefers to keep most of her story prompts in one conversation because the stories sometimes refer to previous incidents in the timeline.
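Here's a toy Python sketch of why editing a prompt uses up less of the limit than adding a follow-up. It's only an illustration under loud assumptions: one word stands in for one token (real services use their own tokenizers), every story is pretended to be 500 words long, and the function names are made up for this example rather than taken from Claude or ChatGPT.

```python
# Toy model of a chat conversation. Assumption: one whitespace-separated
# word counts as one token. Real tokenizers split text differently.

def count_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: one word = one token."""
    return len(text.split())


def context_size(conversation: list[str]) -> int:
    """The whole conversation so far is sent along with each new request."""
    return sum(count_tokens(message) for message in conversation)


def add_prompt(conversation: list[str], prompt: str, response: str) -> list[str]:
    """Follow-up: earlier messages stay, and the new pair is appended."""
    return conversation + [prompt, response]


def edit_last_prompt(conversation: list[str], prompt: str, response: str) -> list[str]:
    """Edit: the last prompt/response pair is replaced instead of kept."""
    return conversation[:-2] + [prompt, response]


story = "word " * 500  # pretend every generated story is 500 words long

first = add_prompt([], "Write a story about a boat ride.", story)
followed_up = add_prompt(
    first, "Write it again from Grogu's point of view.", story
)
edited = edit_last_prompt(
    first, "Write a story about a boat ride from Grogu's point of view.", story
)

print(context_size(followed_up))  # 1015: both stories stay in the conversation
print(context_size(edited))       # 512: only the newer story stays
```

The trade-off is the one A+ already noticed: the edited conversation is smaller, but it no longer contains the earlier story, so later stories can't refer back to it.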

Text from sketch

Generative artificial intelligence

How it works:

- Tons of data: webpages, books, questions & answers, even images & videos

- Computer model that predicts the next word/etc.

  - noticing the context

    - bat: ball, fly, base

    - bat: cave, wing, guano, fly

    - bat: man

- Repeat with training until it gets things mostly right

- We finish each other's sentences/sandwiches

Result: tools that can predict words or fill in blanks or even make images, recordings, and videos of things that don't exist.

They don't actually think for themselves yet, although sometimes the results can be quite interesting.

Things to remember:

- It doesn't actually know or think things. Not everything it says is true. Double-check when needed.

- It usually doesn't cite or credit its sources. Even when it seems to do so, it can be wrong. So double-checking can be hard.

- Use it to help you learn & explore. Don't let it substitute for your thinking & learning.

- Don't put sensitive/private info in.

Caveats: The models cost a lot of energy to create. They cost less energy to use, but still a lot in terms of carbon emissions, water, etc. The data they were trained on includes things that people didn't give them permission to use. They are changing jobs & industries, but are often overhyped. Everyone's still figuring things out.

prompt, response, context, tokens

sachachua.com/2025-02-27-01
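To make the "predicts the next word by noticing the context" idea from the sketch a bit more concrete, here's a toy in Python. It is not how Claude or ChatGPT work: real models learn numeric weights from huge data sets instead of storing and looking up sentences. The two training sentences, the scoring rule, and the function names are all made up for this example.

```python
from collections import Counter

# A tiny made-up "data set". Real models are trained on vastly more text.
TRAINING_TEXT = [
    "the player swung the bat and hit the ball",
    "the bat woke up and flew out of the cave",
]


def learn(sentences):
    """For every word after the first, record which words came before it."""
    examples = []
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(1, len(words)):
            earlier_words = set(words[:i])
            examples.append((earlier_words, words[i - 1], words[i]))
    return examples


def predict_next(examples, prompt):
    """Guess the next word for a prompt.

    Candidates are words that followed the prompt's last word in training.
    Each candidate scores a point for every context word it shares with
    the prompt, so the surrounding words steer the guess.
    """
    words = prompt.lower().split()
    if not words:
        return None
    context, last_word = set(words), words[-1]
    scores = Counter()
    for earlier_words, previous_word, next_word in examples:
        if previous_word == last_word:
            scores[next_word] += len(earlier_words & context)
    best = scores.most_common(1)
    return best[0][0] if best else None


examples = learn(TRAINING_TEXT)
print(predict_next(examples, "she swung the bat and hit the"))  # ball
print(predict_next(examples, "the bat flew out of the"))        # cave
```

Both prompts end in "the" and both mention "bat", but the other words ("swung", "hit" versus "flew", "out") tip the guess toward "ball" or "cave".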

Other resources:

- Generative AI explained in 2 minutes - YouTube: this is probably at her level

- Generative AI Explained In 5 Minutes | What Is GenAI? | Introduction To Generative AI | Simplilearn - YouTube: more focused on image generation

I also showed her what NotebookLM could do with audio podcast generation, so she could get a sense of computer-generated audio. She noticed the interactive mode, so we experimented with that for a bit. The pauses in her speech tend to trigger premature answers, so it works better if she thinks about her sentence and says it confidently.

Going forward

A+ finds generating text very engaging at the moment and I think she's learning a lot through experiments. She'll probably have a deeper understanding of and appreciation for fiction than I did as a kid because she can easily change some things and see how it feels. (What if this story is told in third-person perspective? What if this story is told in present tense or past tense? What if this event happens differently; what would happen because of that change?)

With any luck, exposure to lots of story elements, vivid words, and dialogue will influence how she writes. Maybe she'll eventually get to the point where she can imagine a story and then want to write parts of it or all of it herself to bring it closer to her vision. So it's probably worth supporting this and seeing where it can go.