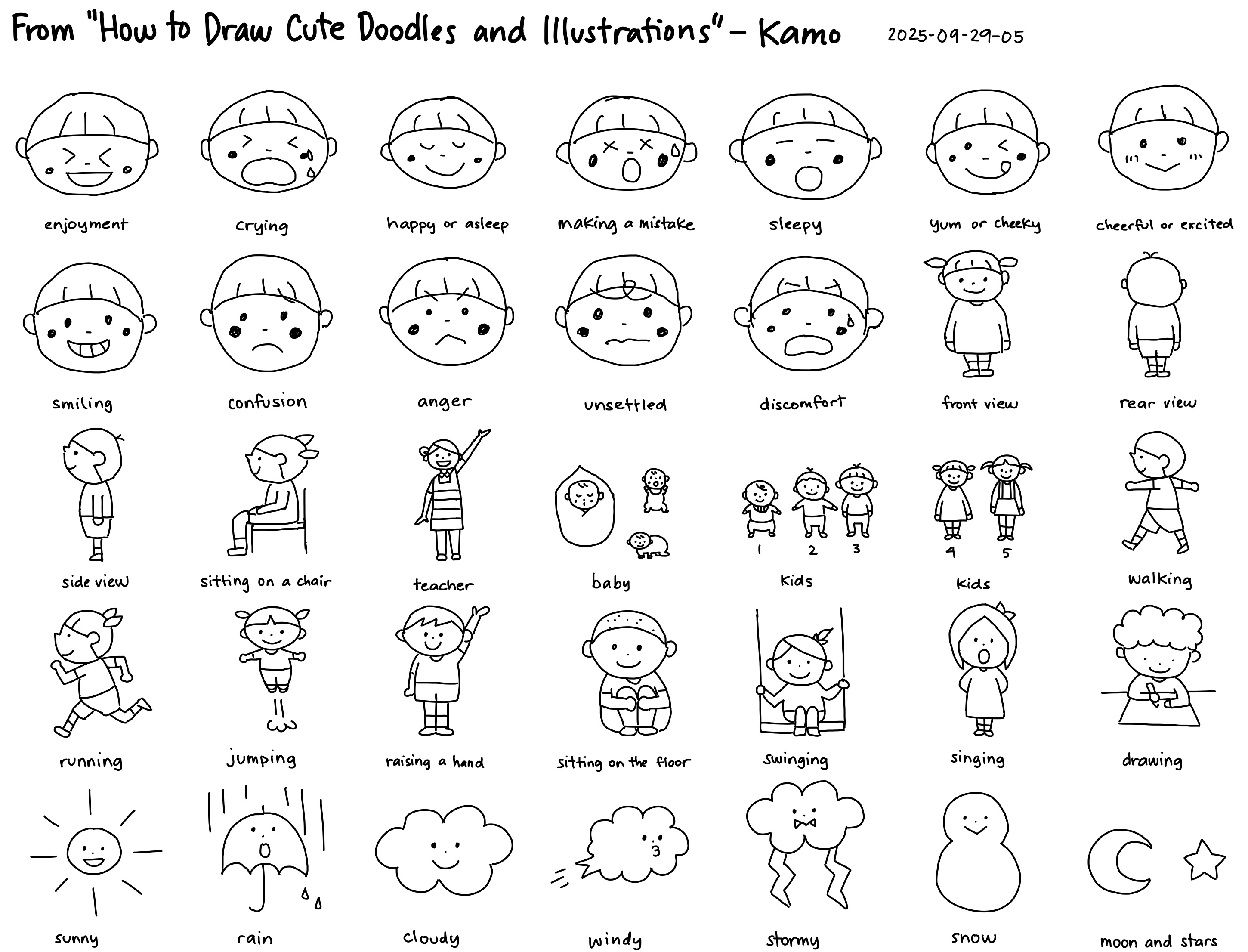

My curves are shaky. I'm mostly learning to ignore that and draw anyway. Good thing redoing them is a matter of a two-finger tap with my left hand, and then I can redraw until it feels mostly right. I try up to three times before I say, fine, let's just go with that.

I often draw with my iPad balanced on my lap, so there's an inherent wobbliness to it. I think this is a reasonable trade-off. Then I can keep drawing cross-legged in the shade at the playground instead of sitting at the table in the sun. The shakiness is still there when I draw on a solid table, though. I have a Paperlike screen protector, which I like more than the slippery feel of the bare iPad screen. That helps a little.

It's possible to cover it up and pretend to

confidence that I can't draw with. I could smooth

out the shakiness of my curves by switching to

Procreate, which has more stylus sensitivity

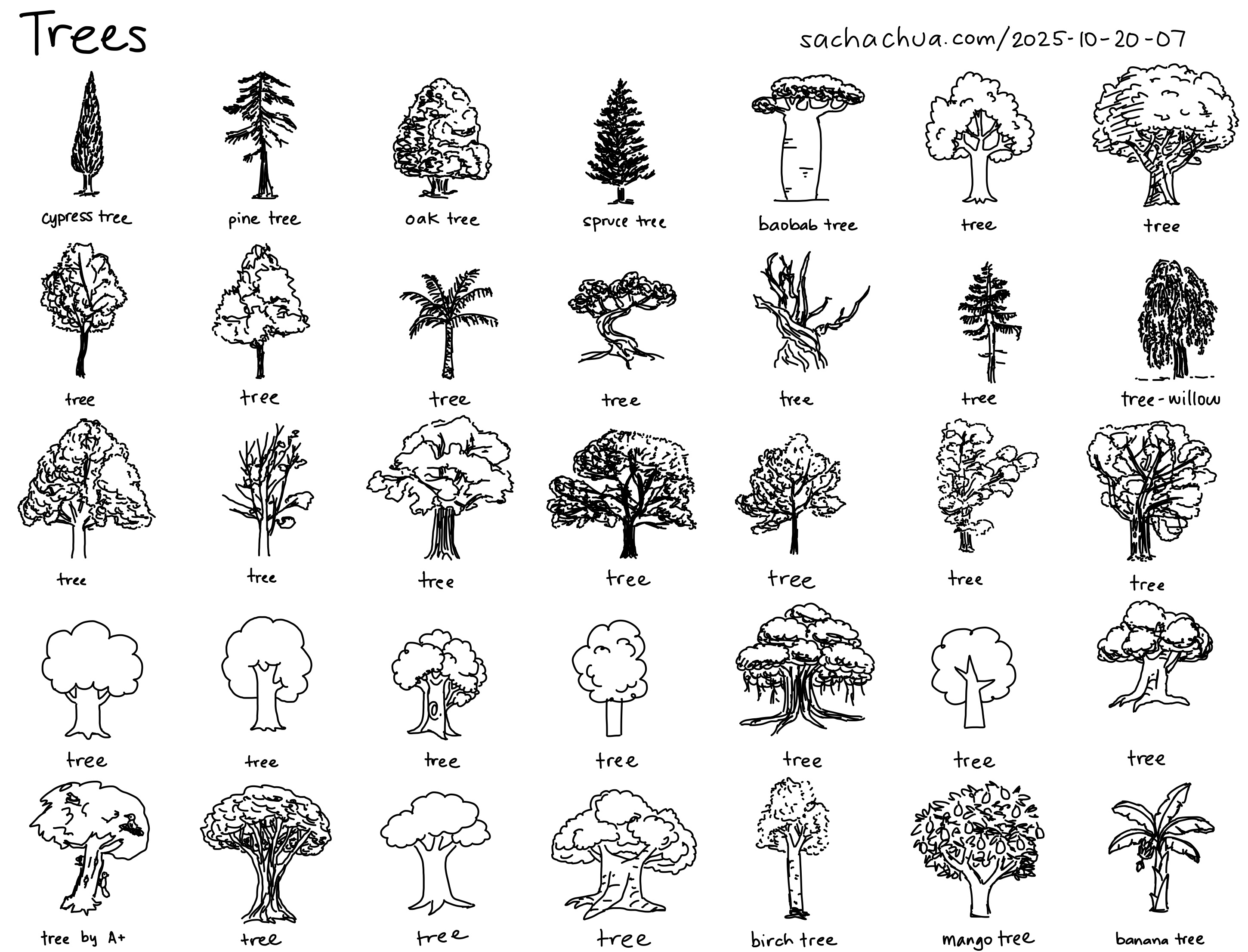

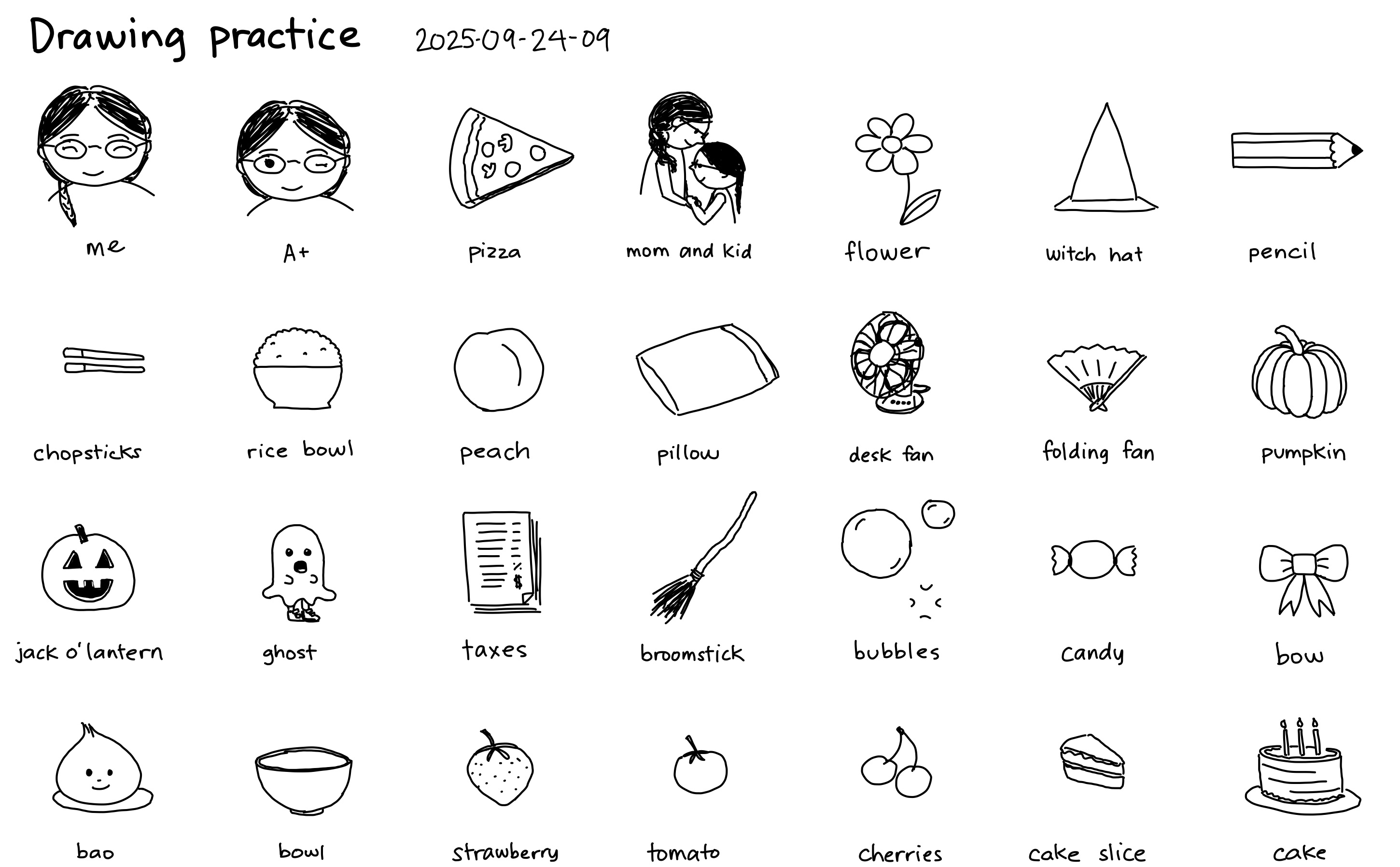

settings than Noteful does. A+ loves the way

Procreate converts her curves to arcs. She moves

the endpoints around to where she wanted to put

them. I'm tempted to do the same, but I see her

sometimes get frustrated when she tries to draw

without that feature, and I want to show her the

possibilities that come with embracing

imperfection. It's okay for these sketches to be a

little shaky. These are small and quick.

They don't have to be polished.

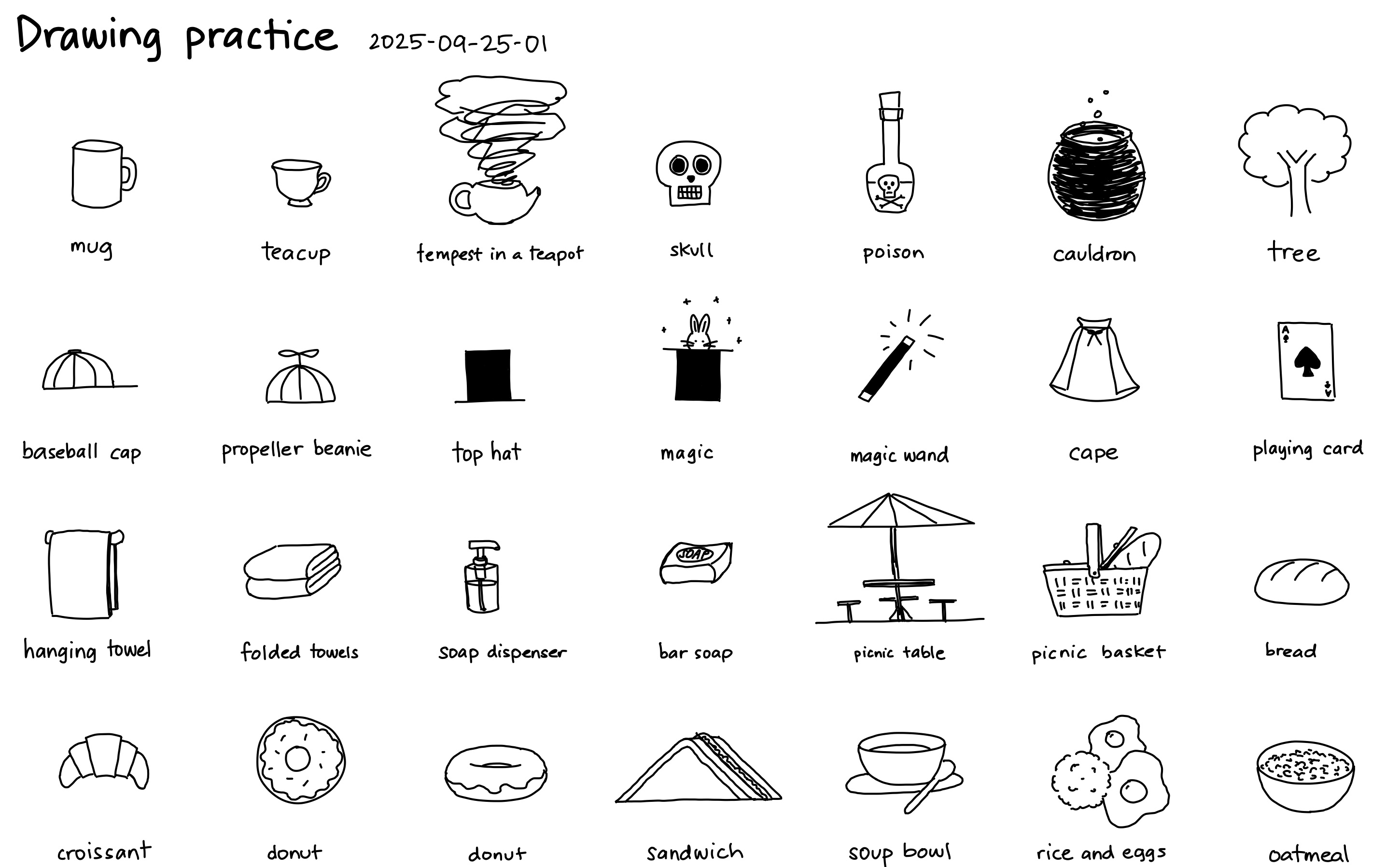

The Internet says to draw faster and with a looser

grip, and that lots of practice will build fine

motor skills. I'm not sure I'll get that much

smoother. I think of my mom and her Parkinson's

tremors, and I know that time doesn't necessarily

bring improvement. But it's better to keep trying

than to shy away from it. Maybe as I relax more

into having my own time, working on my own things

and moving past getting things done, I'll give

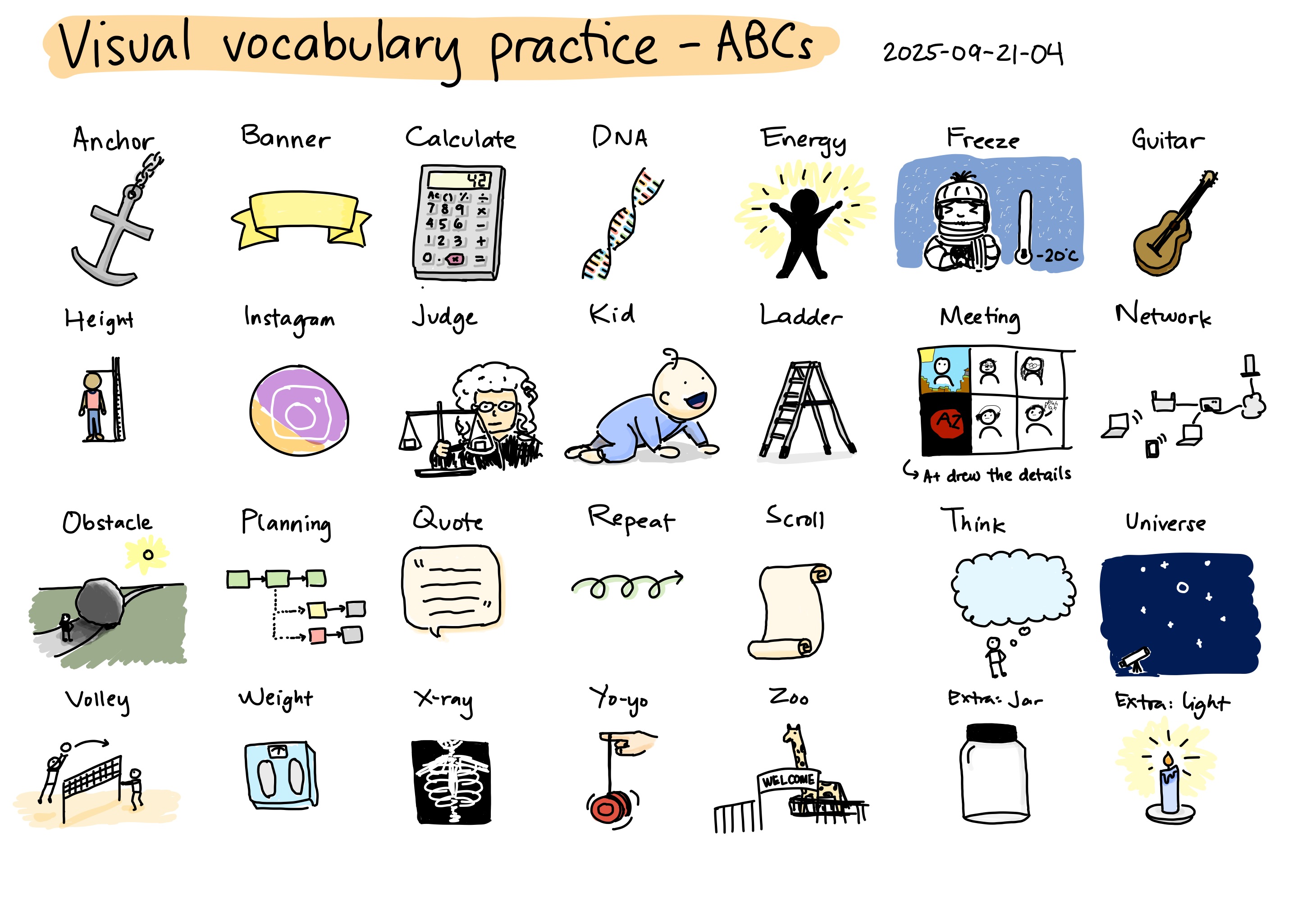

myself more time for drawing exercise, like

filling pages with just lines and circles.
