EmacsConf 2025 notes

Intended audience: This is a long post (~ 8,400 words). It's mostly for me, but I hope it might also be interesting if you like to use Emacs to do complicated things or if you also happen to organize online conferences.

Update: Added a note about streaming from virtual desktops.

The videos have been uploaded and thank-you notes have been sent, so now I get to write quick notes on EmacsConf 2025 and reuse some of my code from last year's post.

- Stats

- Timeline

- Managing conference information in Org Mode and Emacs

- Communication

- Schedule

- Recorded introductions

- Recorded videos

- Captioning

- BigBlueButton web conference

- Checking speakers in

- Hosting

- Infrastructure

- Streaming

- Publishing

- Etherpad

- IRC

- Extracting the Q&A

- Budget

- Tracking my time

- Thanks

- Overall

Stats

While organizing EmacsConf 2025, I thought it was going to be a smaller conference compared to last year because of lots of last-minute cancellations. Now that I can finally add things up, I see how it all worked out:

| 2024 | 2025 | Type |
|---|---|---|
| 31 | 25 | Presentations |
| 10.7 | 11.3 | Presentation duration (hours) |
| 21 | 11 | Q&A web conferences |
| 7.8 | 5.2 | Q&A duration (hours) |
| 18.5 | 16.5 | Total duration (hours) |

EmacsConf 2025 was actually a little longer than 2024 in total presentation time, although that's probably because we had more live talks which included answering questions. It was almost as long overall including live Q&A in BigBlueButton rooms, but we did end an hour or so earlier each day.

Looking at the livestreaming data, I see that we had fewer participants compared to the previous year. Here are the stats from Icecast, the program we use for streaming:

- Saturday:

  - gen: 107 peak + 7 lowres (compared to 191 in 2024)

  - dev: 97 peak + 7 lowres (compared to 305 in 2024)

- Sunday: I forgot to copy Sunday stats, whoops! I think there were about 70 people on the general stream. Idea: Automate this next time.
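One way to automate copying the stats might look like the sketch below. It assumes the Icecast server exposes its JSON status page (`/status-json.xsl`, available in Icecast 2.4 and later) and that each mount entry carries `listenurl` and `listener_peak` fields. The function names, the example URL, and the current-listener numbers in the sample are made up for illustration; this is not the EmacsConf setup.

```python
import json
from urllib.request import urlopen


def peak_listeners(status):
    """Return {mount name: peak listener count} from parsed Icecast status JSON."""
    sources = status.get("icestats", {}).get("source", [])
    # Icecast reports a single mount as one object rather than a list.
    if isinstance(sources, dict):
        sources = [sources]
    peaks = {}
    for source in sources:
        mount = source.get("listenurl", "").rsplit("/", 1)[-1]
        peaks[mount] = source.get("listener_peak", 0)
    return peaks


def fetch_status(base_url):
    """Fetch and parse the status page (assumed to live at /status-json.xsl)."""
    with urlopen(base_url.rstrip("/") + "/status-json.xsl") as response:
        return json.load(response)


# Hypothetical sample shaped like the status page, using the Saturday peaks above.
sample = {"icestats": {"source": [
    {"listenurl": "http://example.org:8000/gen.webm", "listeners": 80, "listener_peak": 107},
    {"listenurl": "http://example.org:8000/dev.webm", "listeners": 60, "listener_peak": 97},
]}}
print(peak_listeners(sample))
```

Calling `peak_listeners(fetch_status(...))` from a scheduled job at the end of each day and appending the result to a file would be enough to avoid losing the numbers.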

The YouTube livestream also had fewer participants at the time of the stream, but that's okay. Here are the stats from YouTube:

| 2024 peak | 2025 peak | YouTube livestream |
|---|---|---|
| 46 | 23 | Gen Sat AM |
| 24 | 7 | Gen Sat PM |
| 15 | 8 | Dev Sat AM |
| 20 | 14 | Dev Sat PM |
| 28 | 14 | Gen Sun AM |
| 26 | 11 | Gen Sun PM |

Fewer people attended compared to last year, but it's still an amazing get-together from the perspective of being able to get a bunch of Emacs geeks in a (virtual) room. People asked a lot of questions over IRC and the Etherpads, and the speakers shared lots of extra details that we captured in the Q&A sessions. I'm so glad people were able to connect with each other.

Based on the e-mails I got from speakers about their presentations and the regrets from people who couldn't make it to EmacsConf, it seemed that people were a lot busier in 2025 compared to 2024. There were also a lot more stressors in people's lives. But it was still a good get-together, and it'll continue to be useful going forward.

At the moment, the EmacsConf 2025 videos have about 20k views total on YouTube. (media.emacsconf.org doesn't do any tracking.) Here are the most popular ones so far:

- EmacsConf 2025: Zettelkasten for regular Emacs hackers - Christian Tietze (he) (2.8k views)

- EmacsConf 2025: Emacs, editors, and LLM driven workflows - Andrew Hyatt (he/him) (1.9k)

- EmacsConf 2025: Modern Emacs/Elisp hardware/software accelerated graphics - Emanuel Berg (he/him) (1.2k)

- EmacsConf 2025: An introduction to the Emacs Reader - Divyá (835)

- EmacsConf 2025: Interactive Python programming in Emacs - David Vujic (he/him) (731)

While I was looking at the viewing stats on YouTube, I noticed that people are still looking at videos all the way back to 2013 (like Emacs Live - Sam Aaron and Emacs Lisp Development - John Wiegley), and many videos have thousands of views. Here are some of the most popular ones from past conferences:

- EmacsConf 2022: What I'd like to see in Emacs - Richard M. Stallman (54k views)

- EmacsConf 2019 - 26a - Emacs: The Editor for the Next Forty Years - Perry E. Metzger (pmetzger) (21k)

- EmacsConf 2019 - 32 - VSCode is Better than Emacs - Making Emacs More Approachable - Zaiste (19k)

- EmacsConf 2019 - 19 - Awesome Java editing environment - Torstein Krause Johansen (15k)

- EmacsConf 2022: Top 10 reasons why you should be using Eshell - Howard Abrams (he/him) (15k)

Views aren't everything, of course, but maybe they let us imagine a little about how many people these speakers might have been able to reach. How wonderful it is that people can spend a few days putting together their insights and then have that resonate with other people through time. Speakers are giving us long-term gifts.

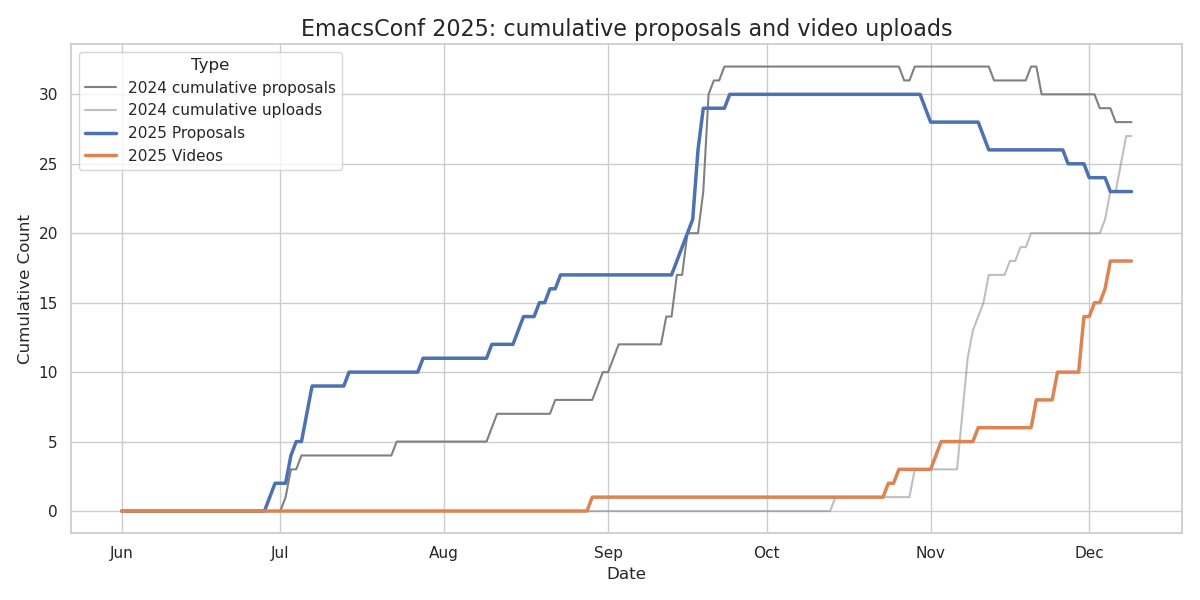

Timeline

Of course, the process of preparing all of that started long before the days of the conference. We posted the call for proposals towards the end of June, like we usually do, because we wanted to give people plenty of time to start thinking about their presentations. We did early acceptances again this year, and we basically accepted everything, so people could start working on their videos almost right away. Here's the general timeline:

| Milestone | Date |
|---|---|
| CFP | 2025-06-27 Fri |
| CFP deadline | 2025-09-19 Fri |
| Speaker notifications | 2025-09-26 Fri |
| Publish schedule | 2025-10-24 Fri (oops, forgot to do this elsewhere) |
| Video submission target date | 2025-10-31 Fri |
| EmacsConf | 2025-12-06 Sat - 2025-12-07 Sun |
| Live talks, Q&A videos posted | 2025-12-17 |
| Q&A notes posted | 2025-12-28 |
| These notes | 2026-01-01 |

This graph shows that we got more proposals earlier this year (solid blue line: 2025) compared to last year (gray: 2024), although there were fewer last-minute ones and more cancellations this year. Some people were very organized. (Video submissions in August!) Some people sent theirs in later because they first had to figure out all the details of what they proposed, which is totally relatable for anyone who's found themselves shaving Emacs yaks before.

I really appreciated the code that I wrote to create SVG previews of the schedule. That made it much easier to see how the schedule changed as I added or removed talks. I started stressing out about the gap between the proposals and the uploaded videos (orange) in November. Compared to last year, the submissions slowly trickled in. The size of the gap between the blue line (cumulative proposals) and the orange line (cumulative videos uploaded) was much like my stress level, because I was wondering how I'd rearrange things if most of the talks had to cancel at the last minute or if we were dealing with a mostly-live schedule. Balancing EmacsConf anxiety with family challenges resulted in all sorts of oopses in my personal life. (I even accidentally shrank my daughter's favourite T-shirt.) It helped to remind myself that we started off as a single-track single-day conference with mostly live sessions, that it's totally all right to go back to that, and that we have a wonderful (and very patient) community. I think speakers were getting pretty stressed too. I reassured speakers that Oct 31 was a soft target date, not a hard deadline. I didn't want to cancel talks just because life got busy for people.

It worked out reasonably well. We had enough capacity to process and caption the videos as they came in, even the last-minute uploads. Many speakers did their own captions. We ended up having five live talks. Live talks are generally a bit more stressful for me because I worry about technical issues or other things getting in the way, but all those talks went well, thank goodness.

For some reason, Linode doesn't seem to show me detailed accrued charges any more. I think it used to show them before, which is why I managed to write last year's notes in December. If I skip the budget section of these notes, maybe I can post them earlier, and then just follow up once the invoices are out.

I think the timeline worked out mostly all right this year. I don't think moving the target date for videos earlier would have made much of a difference, since speakers would probably still be influenced by the actual date of the conference. It's hard to stave off that feeling of pre-conference panic: Do we have enough speakers? Do we have enough videos? But these graphs might help me remember that it's been like that before and it has still worked out, so it might just be part of my job as an organizer to embrace the uncertainty. Most conferences do live talks, anyway.

Emacs Lisp code for making the table

```emacs-lisp
(append '(("slug" "submitted" "uploaded" "cancelled") hline)
        (sort (delq nil
                    (org-map-entries
                     (lambda ()
                       (list
                        (org-entry-get (point) "SLUG")
                        (org-entry-get (point) "DATE_SUBMITTED")
                        (org-entry-get (point) "DATE_UPLOADED")
                        (org-entry-get (point) "DATE_CANCELLED")))
                     "DATE_SUBMITTED={.}"))
              :key (lambda (o) (format "%s - %s" (elt o 3) (elt o 2)))
              :lessp #'string<) nil)
```

Python code for plotting the graph

```python
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt
import matplotlib.dates as mdates

# current_data and previous_data are not defined in this block; they are expected
# to be supplied from outside as rows of (slug, submitted, uploaded, cancelled).
all_dates = pd.date_range(start='2025-06-01', end='2025-12-09', freq='D')

def convert(rows):
    df = pd.DataFrame(rows, columns=['slug', 'proposal_date', 'upload_date', 'cancelled_date'])
    # Treat empty cells and "nil" as missing values.
    df = df.map(lambda x: pd.NA if (x == [] or x == "nil") else x)
    # Move every date into 2025 so that both years can share the same x-axis.
    df['proposal_date'] = pd.to_datetime(df['proposal_date']).apply(lambda d: d.replace(year=2025))
    df['upload_date'] = pd.to_datetime(df['upload_date']).apply(lambda d: d.replace(year=2025))
    df['cancelled_date'] = pd.to_datetime(df['cancelled_date']).apply(lambda d: d.replace(year=2025))
    additions = df[df['proposal_date'].notna()].groupby('proposal_date').size().reindex(all_dates, fill_value=0)
    subtractions = df[df['cancelled_date'].notna()].groupby('cancelled_date').size().reindex(all_dates, fill_value=0)
    upload_counts = df[df['upload_date'].notna()].groupby('upload_date').size().reindex(all_dates, fill_value=0).cumsum()
    # Net proposals: cumulative submissions minus cumulative cancellations.
    prop_counts = (additions - subtractions).cumsum()
    return prop_counts, upload_counts

prop_counts, upload_counts = convert(current_data)
prop_counts_2024, upload_counts_2024 = convert(previous_data)

plot_df = pd.DataFrame({
    'Date': all_dates,
    '2025 Proposals': prop_counts.values,
    '2025 Videos': upload_counts.values,
})
plot_df_melted = plot_df.melt(id_vars='Date', var_name='Type', value_name='Count')

sns.set_theme(style="whitegrid")
plt.figure(figsize=(12, 6))
# Last year's lines go in gray behind this year's coloured lines.
plt.plot(all_dates, prop_counts_2024, color='gray', label='2024 cumulative proposals')
plt.plot(all_dates, upload_counts_2024, color='gray', alpha=0.5, label='2024 cumulative uploads')
ax = sns.lineplot(data=plot_df_melted, x='Date', y='Count', hue='Type', linewidth=2.5)
ax.xaxis.set_major_locator(mdates.MonthLocator())
ax.xaxis.set_major_formatter(mdates.DateFormatter('%b'))
plt.title('EmacsConf 2025: cumulative proposals and video uploads', fontsize=16)
plt.xlabel('Date', fontsize=12)
plt.ylabel('Cumulative Count', fontsize=12)
plt.tight_layout()
plt.savefig('submissions_plot.png')
```

Managing conference information in Org Mode and Emacs

Organizing the conference meant keeping track of lots of e-mails and lots of tasks over a long period of time. To handle all of the moving parts, I relied on Org Mode in Emacs to manage all the conference-related information.

This year, I switched to mainly using the general organizers notebook, with the year-specific one mostly just used for the draft schedule. I added some shortcuts to jump to headings in the main notebook or in the annual notebook (emacsconf-main-org-notebook-heading and emacsconf-current-org-notebook-heading in emacsconf.el).

As usual, we used lots of structured entry properties to store all the talk information. Most of my functions from last year worked out fine, aside from the occasional odd bug when I forgot what property I was supposed to use. (:ROOM:, not :BBB:…) The validation functions I thought about last year would have been nice to have, since I ran into a minor case-sensitivity issue with the Q&A value for one of the talks (live versus Live). This happened last year too and it should have been caught by the case-fold-search I thought I added to my configuration, but since that didn't seem to kick in, I should probably also deal with it on the input side. Idea: I want to validate that certain fields like QA_TYPE have only a specified set of values. I also probably need a validation function to make sure that newly-added talks have all the files that the streaming setup expects, like an overlay for OBS, and one that checks if I've set any properties outside the expected list.
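The two property checks described above (a fixed set of values for fields like QA_TYPE, and no properties outside an expected list) could be sketched roughly as follows. This is only an illustration in Python rather than the Emacs Lisp the notebook actually uses. The function name, the allowed QA_TYPE values, and the expected property list are all hypothetical placeholders.

```python
def validate_talk(properties, allowed_values, expected_keys):
    """Return a list of human-readable problems found in one talk's properties."""
    problems = []
    for key, allowed in allowed_values.items():
        value = properties.get(key)
        if value is None:
            continue
        if value not in allowed:
            # Suggest the canonical spelling when only the case differs.
            match = next((a for a in allowed if a.lower() == value.lower()), None)
            hint = f" (did you mean {match!r}?)" if match else ""
            problems.append(f"{key}: unexpected value {value!r}{hint}")
    for key in properties:
        if key not in expected_keys:
            problems.append(f"{key}: property not in the expected list")
    return problems


# Hypothetical values for illustration; the real lists would come from the notebook.
ALLOWED = {"QA_TYPE": {"live", "pad", "irc", "none"}}
EXPECTED = {"SLUG", "QA_TYPE", "ROOM"}

talk = {"SLUG": "example", "QA_TYPE": "Live", "BBB": "room-1"}
for problem in validate_talk(talk, ALLOWED, EXPECTED):
    print(problem)
```

Running a check like this over every talk before generating the schedule would catch both the live/Live mismatch and the :ROOM: versus :BBB: mix-up on the input side.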

Communication

After some revision, the call for participation went out on emacs-tangents, Emacs News, emacsconf-discuss, emacsconf-org, Reddit, lobste.rs, and System Crafters. (Thanks!) People also mentioned it in various meetups, and there were other threads leading up to it (Reddit, HN, lemmy.world, @fsf):

- EmacsConf CFP ending and a completing-read example

- Event - EmacsConf — Free Software Foundation — Working together for free software

- My eepitch-send, actions and the situation calculus

- EmacsConf/ 2025 Online Conference am Wochenende - dem 6. und 7. Dez. 25 - debianforum.de

Once the speakers confirmed the tentative schedules worked for them, I published the schedule on emacsconf.org on Oct 24, as scheduled. I forgot to announce the schedule on emacsconf-discuss and other places, though. I probably felt a little uncertain about announcing the schedule because it was in such flux. There was even a point where we almost cancelled the whole thing. I also got too busy to reach out to podcasts. I remembered to post it to foss.events, though! Idea: I can announce things and trust that it'll all settle down. I can spend some time neatening up our general organizers-notebook so that there's more of a step-by-step process. Maybe org-clone-subtree-with-time-shift will be handy.

There were also a few posts and threads afterwards:

- Zettelkasten for Regular Emacs Hackers – EmacsConf 2025 talk — Zettelkasten Forum

- Some problems of modernizing Emacs (eev @ EmacsConf 2025)

- EmacsConf 2025: Interactive Python programming in Emacs - David Vujic (he/him) | David Vujic

- HN: My favorite talks from emacsconf 2025

- Exploring Speculative JIT Compilation for Emacs Lisp with Java | Kyou is kyou is kyou is kyou

For communicating with speakers and volunteers, I reused the mail merge from previous years, with a few more tweaks. I added a template for thanking volunteers. I added some more code for mailing all the volunteers with a specific tag. Some speakers were very used to the process and did everything pretty independently. Other speakers needed a bit more facilitation.

I could get better at coordinating with people who want to help. In the early days, there wasn't that much to help with. A few people volunteered to help with captions for specific videos, so I waited for their edits and focused on other tasks first. I didn't want to pressure them with deadlines or preempt them by working on those when I'd gotten through my other tasks, but it was hard to leave those tasks dangling. They ended up sending in the edits shortly before the conference, which still gave me enough time to coordinate with speakers to review the captions. It was hard to wait, but I appreciate the work they contributed. In late November and early December, there were so many tasks to juggle, but I probably could have e-mailed people once a week with a summary of the things that could be done. Idea: I can practice letting people know what I'm working on and what tasks remain, and I can find a way to ask for help that fits us. If I clean up the design of the backstage area, that might make it easier for people to get started. If I improve my code for comparing captions, I can continue editing subtitles as a backup if I want to take a break from my other work, and merge in other people's contributions when they send them.

I set up Mumble for backstage coordination with other organizers, but we didn't use it much because most of the time, we were on air with someone, so we didn't want to interrupt each other.

There was a question about our guidelines for conduct and someone's out-of-conference postings. I forwarded the matter to our private mailing list so that other volunteers could think about it, because at that point in time, my brain was fried. That seemed to have been resolved. Too bad there's no edebug-on-entry for people so we can figure things out with more clarity. I'm glad other people are around to help navigate situations!

Schedule

Like last year, we had two days of talks, with two tracks on the first day, and about 15-20 minutes between each talk. We had a couple of last-minute switches to live Q&A sessions, which was totally fine. That's why I set up all the rooms in advance.

A few talks ended up being a bit longer than their proposed length. With enough of a heads-up, I could adjust the schedule (especially as other talks got cancelled), but sometimes it was difficult to keep track of changes as I shuffled talks around. I wrote some code to calculate the differences, and I appreciate how the speakers patiently dealt with my flurries of e-mails.
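To illustrate the kind of difference calculation mentioned above, here is a minimal sketch that compares two versions of a schedule and lists what moved. It is not the code used for the conference: the function name, the slugs, and the times are invented, and a schedule is simplified to a mapping from talk slug to start time.

```python
from datetime import datetime


def schedule_changes(old, new):
    """Compare two {slug: start time} schedules and describe what changed."""
    changes = []
    for slug in sorted(old.keys() | new.keys()):
        before, after = old.get(slug), new.get(slug)
        if before == after:
            continue
        if before is None:
            changes.append(f"{slug}: added at {after:%H:%M}")
        elif after is None:
            changes.append(f"{slug}: removed (was {before:%H:%M})")
        else:
            minutes = int((after - before).total_seconds() // 60)
            changes.append(f"{slug}: moved {minutes:+d} min to {after:%H:%M}")
    return changes


# Hypothetical before and after schedules.
old = {
    "talk-a": datetime(2025, 12, 6, 9, 30),
    "talk-b": datetime(2025, 12, 6, 10, 0),
    "talk-c": datetime(2025, 12, 6, 10, 40),
}
new = {
    "talk-a": datetime(2025, 12, 6, 9, 30),
    "talk-b": datetime(2025, 12, 6, 10, 20),
    "talk-d": datetime(2025, 12, 6, 11, 0),
}
for change in schedule_changes(old, new):
    print(change)
```

A summary like this could go into the e-mail to each affected speaker, so that only people whose times changed get notified.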

Scheduling some more buffer time between talks might be good. In general, the Q&A time felt like a good length, though, and it was nice that people had the option of continuing with the speaker in the web conference room. So it was actually more like we had two or three or four tracks going on at the same time.

Having two tracks allowed us to accept all the talks. I'm glad I kept the dev track to a single day, though. I ended up managing things all by myself on Sunday afternoon, and that was hard enough with one track. Fortunately, speakers were comfortable navigating the Etherpad questions themselves and reading the questions out loud. Sometimes I stepped in during natural pauses to read the next question on the list (assuming I managed to find the right window among all the ones open on my screen).

Idea: If I get better at setting up one window per ongoing Q&A session and using the volume controls to spatialize each so that I can distinguish left/middle/right conversations, it might be easier for me to keep track of all of those. I wasn't quite sure how to arrange all the windows I wanted to pay attention to, even with an external screen (1920x1200 + my laptop's 1920x1080). I wonder if I'd trust EXWM to handle tiling all the different web browser windows, while keeping the VNC windows floating so that they don't mess with the size of the stream. Maybe I can borrow my husband's ultrawide monitor. Maybe I can see if I can use one of those fancy macropads for quick shortcuts, like switching to a specified set of windows and unmuting myself. Maybe I can figure out how to funnel streaming captions into IRC channels or another text form (also a requested feature) so that I can monitor them that way and quickly skim the history… Or I can focus on making it easier for people to volunteer (and reminding myself that it's okay to ask for their help!), since then I don't have to split my attention all those different ways and I get to learn from other people's awesomeness.

If we decide to go with one track next year, we might have to prioritize talks, which is hard especially if some of the accepted speakers end up cancelling anyway. Alternatively, a better way might be to make things easier for last-minute volunteers to join and read the questions. They don't even need any special setup or anything; they can just join the BigBlueButton web conference session and read from the pad. Idea: A single Etherpad with all the direct BBB URLs and pad links will make this easier for last-minute volunteers.

Also like last year, we added an open mic session to fill in the time from a last-minute cancellation, and that went well. It might be nice to build that into the schedule earlier instead of waiting for a cancellation so that people can plan for it. We moved the closing remarks earlier as well so that people didn't have to stay up so late in other timezones.

I am super, super thankful we had a crontab automatically switching between talks, because that meant one less time-sensitive thing I had to pay attention to. I didn't worry about cutting people off too early because people could continue off-stream, although this generally worked better for Q&A sessions done over BigBlueButton or on Etherpad rather than IRC. I also improved the code for generating a test schedule so that I could test the automatic switching.

It was probably a good thing that I didn't automatically write the next session time to the Etherpad, though, because people had already started adding notes and questions to the pad before the conference, so I couldn't automatically update them as I changed the schedule. Idea: I can write a function to copy the heads-up for the next talk, or I can add the text to a checklist so that I can easily copy and paste it. Since I was managing the check-ins for both streams as well as doing the occasional bit of hosting, a checklist combining all the info for all the streams might be nice. I didn't really take advantage of the editability of Etherpad, so maybe putting it into HTML instead will allow me to make it easier to skim or use. (Copy icons, etc.)

Like before, I offset the start of the dev track to give ourselves a little more time to warm up, and I started Sunday morning with more asynchronous Q&A instead of web conferences. Not much in terms of bandwidth issues this year.

We got everyone's time constraints correctly this year, hooray! Doing timezone conversions in Emacs means I don't have to calculate things myself, and the code for checking the time constraints of scheduled sessions worked with this year's shifting schedules too. Idea: It would be great to mail iCalendar files (.ics) to each speaker so that they can easily add their talk (including check-in time, URLs, and mod codes) to their calendar. Bonus points if we can get it to update previous copies if I've made a change.
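The iCalendar idea could start from something like this sketch, which builds a minimal single-event .ics file using only the standard library. The function names, the `example.org` UID domain, the URL, and the sample time are placeholders. Keeping the same UID and increasing SEQUENCE is how the iCalendar format (RFC 5545) marks a revised event, but whether a given calendar program actually replaces the earlier copy depends on the program and on how the file is imported, so that part would need testing.

```python
from datetime import datetime, timedelta, timezone


def escape_text(value):
    """Escape characters that are special in iCalendar TEXT values."""
    for char in ("\\", ";", ","):
        value = value.replace(char, "\\" + char)
    return value.replace("\n", "\\n")


def talk_ics(slug, title, start, minutes, url, sequence=0):
    """Build a minimal iCalendar document for one talk.

    `start` must be a timezone-aware datetime; times are written in UTC.
    Long lines are not folded at 75 octets in this sketch.
    """
    def stamp(moment):
        return moment.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")

    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//example//talk schedule sketch//EN",
        "BEGIN:VEVENT",
        f"UID:{slug}@example.org",  # stable UID so that updates refer to the same event
        f"SEQUENCE:{sequence}",     # increase this each time the talk is rescheduled
        f"DTSTAMP:{stamp(datetime.now(timezone.utc))}",
        f"DTSTART:{stamp(start)}",
        f"DTEND:{stamp(start + timedelta(minutes=minutes))}",
        f"SUMMARY:{escape_text(title)}",
        f"URL:{url}",
        "END:VEVENT",
        "END:VCALENDAR",
    ]
    return "\r\n".join(lines) + "\r\n"


# Hypothetical talk time and URL, for illustration only.
ics = talk_ics(
    "example-talk",
    "Emacs, editors, and LLM driven workflows",
    datetime(2025, 12, 6, 14, 0, tzinfo=timezone.utc),
    20,
    "https://example.org/talks/example-talk",
)
print(ics)
```

Check-in times and moderator codes could go into a DESCRIPTION line built the same way, using `escape_text` for the value.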

This is what the schedule looked like:

```emacs-lisp
(with-temp-file (expand-file-name "schedule.svg" emacsconf-cache-dir)
  (let ((emacsconf-use-absolute-url t))
    (svg-print
     (emacsconf-schedule-svg
      800 300
      (emacsconf-publish-prepare-for-display
       (emacsconf-get-talk-info))))))
```

I added a vertical view to the 2025 organizers notebook, which was easier to read. I also added some code to handle cancelling a talk and keeping track of rescheduled talks.

Idea: I still haven't gotten around to localizing times on the watch pages. That could be nice.

Recorded introductions

Recording all the introductions beforehand was extremely helpful. I used subed-record.el to record and compile the audio without having to edit out the oopses manually. Recording the intros also gave me something to do other than worry about missing videos.

A few speakers helped correct the pronunciations of their names, which was nice. I probably could have picked up the right pronunciation for one of them if I had remembered to check his video first, as he had not only uploaded a video early but even said his name in it. Next time, I can go check that first.

This year, all the intros played the properly corrected files along with their subtitles. The process is improving!

Recorded videos

As usual, most speakers sent in pre-recorded videos this year, although it took them a little bit longer to do them because life got busy for everyone. Just like last year, speakers uploaded their files via PsiTransfer. I picked up some people's videos late because I hadn't checked. I've modified the upload instructions to ask the speakers to email me when they've uploaded their file, since I'm not sure that PsiTransfer can automatically send email when a new file has been uploaded. We set up a proper domain name, so this year, people didn't get confused by trying to FTP to it.

I had to redo some of the re-encodings and ask one speaker to reupload his video, but we managed to catch those errors before they streamed.

I normalized all the audio myself this year. I used Audacity to normalize it to -16 LUFS. I didn't listen to everything in full, but the results seemed to have been alright. Idea: Audio left/right difference was not an issue this year, but in the future, I might still consider mixing the audio down to mono.
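For a rough sense of the arithmetic behind loudness normalization: once a file's integrated loudness has been measured, the adjustment is the difference between the target and the measurement in decibels, and the sample multiplier follows from that. The sketch below shows only this calculation plus a simple mono mixdown. It does not measure loudness or guard against clipping, the function names are made up, and the -22 LUFS input is an invented example.

```python
def gain_to_target(measured_lufs, target_lufs=-16.0):
    """Return (gain in dB, linear amplitude factor) needed to reach the target."""
    gain_db = target_lufs - measured_lufs
    factor = 10 ** (gain_db / 20)
    return gain_db, factor


def mix_to_mono(left, right):
    """Average the left and right samples so both ears get the same signal."""
    return [(l + r) / 2 for l, r in zip(left, right)]


# A recording measured at -22 LUFS needs +6 dB, roughly doubling the amplitude.
gain_db, factor = gain_to_target(-22.0)
print(f"{gain_db:+.1f} dB, multiply samples by {factor:.3f}")
print(mix_to_mono([1.0, 0.0], [0.0, 0.0]))
```

A quiet recording that needs a large boost may clip after this kind of plain gain change, which is one reason to let a dedicated tool such as Audacity do the real work.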

All the files properly loaded from the cache directory instead of getting shadowed by files in other directories, since I reused the process from last year instead of switching mid-way.

People didn't complain about colour smearing, but it looks like the Calc talk had some. Ah! For some reason, MPV was back on 0.35, so now I've modified our Ansible playbook so that it insists on 0.38. I don't think there were any issues about switching between dark mode or light mode.

There was one last-minute upload where I wasn't sure whether there were supposed to be captions in the first part. When I toggled the captions to try to reload them once I copied over the updated VTT, I think I accidentally left them toggled off, but fortunately the speaker let me know in IRC.

For the opening video, I made the text a bit more generic by removing the year references so that I can theoretically reuse the audio next year. That might save me some time.

I modified our mpv.conf to display the time remaining in the lower right-hand corner. This was a fantastic idea that made it easy to give the speaker a heads-up that their recorded talk was about to finish and that we were about to switch over to the live Q&A session. Because sometimes we weren't able to spare enough attention to host a session, I usually just added the time of the next session to the Etherpad to give speakers a heads-up that the stream would be switching away from their Q&A session. Then I didn't need to make a fancy JavaScript timer either.

Captioning

The next step in the process was to caption each uploaded video. While speech recognition tools give us a great headstart, there's really no way around the work of getting technical topics right.

We used WhisperX for speech-to-text again. This time, I used the --initial_prompt argument to try to get it to spell things properly: "Transcribe this talk about Emacs. It may mention Emacs keywords such as Org Mode, Org Roam, Magit, gptel, or chatgpt-shell, or tech keywords such as LLMs. Format function names and keyboard shortcut sequences according to Emacs conventions using Markdown syntax. For example: control h becomes `C-h`." It did not actually do the keyboard shortcut sequences. (Emacs documentation is an infinitesimal fraction of the training data, probably!) It generally did a good job of recognizing Emacs rather than EMAX and capitalizing things. Idea: I can experiment with few-shot prompting techniques or just expand my-subed-fix-common-errors to detect the patterns.
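As an illustration of the pattern-detection route, a few regular-expression rules can already handle the examples mentioned above. This is a sketch in Python, not the actual my-subed-fix-common-errors code, and the rules and names are hypothetical. Spoken phrases such as "control a lot" would produce false positives, so the output still needs a human review.

```python
import re

# Hypothetical mapping from spoken modifier names to Emacs key notation.
MODIFIERS = {"control": "C", "meta": "M"}


def fix_common_errors(text):
    """Apply a few pattern-based corrections to raw transcript text."""
    text = re.sub(r"\bEMAX\b", "Emacs", text, flags=re.IGNORECASE)

    def to_key(match):
        modifier = MODIFIERS[match.group(1).lower()]
        return f"`{modifier}-{match.group(2).lower()}`"

    # "control h" becomes `C-h`, "meta x" becomes `M-x`.
    return re.sub(r"\b(control|meta) ([a-z])\b", to_key, text, flags=re.IGNORECASE)


print(fix_common_errors("In EMAX, press control h to get help or meta x to run a command."))
```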

This year, we used Anush V's sub-seg tool to split the subtitles into reasonably short, logically split phrases. I used to manually split these so that the subtitles flowed more smoothly, or if I was pressed for time, I left it at just the length-based splits that WhisperX uses. sub-seg was much nicer as a starting point, so I wrote a shell script to run it more automatically. It still had a few run-on captions and there were a couple of files that confused it, so I manually split those.

Shell script for calling sub-seg

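```sh
#!/bin/bash
# Usage: pass the transcript text file as the first argument.
# Run the sub-seg prediction step on the transcript.
~/vendor/sub-seg/.venv/bin/python3 ~/vendor/sub-seg/src/prediction.py "$1" /tmp/subseg-predict.txt
# Post-process the predictions into one line per segment.
~/vendor/sub-seg/.venv/bin/python3 ~/vendor/sub-seg/src/postprocess_to_single_lines.py "$1" /tmp/subseg-predict.txt /tmp/subseg-cleaned.txt
# Drop empty lines and save the result next to the input file.
grep -v -e "^$" /tmp/subseg-cleaned.txt > "${1%.*}--backstage--split.txt"
```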

I felt that it was also a good idea to correct the timing before posting the subtitles for other people to work on because otherwise it would be more difficult for any volunteers to be able to replay parts of the subtitles that needed extra attention. WhisperX often gave me overlapping caption timestamps and it didn't always have word timestamps I could use instead. I used Aeneas to re-align the text with the audio so that we could get better timestamps. As usual, Aeneas got confused by silences or non-speech audio, so I used my subed-word-data.el code to visualize the word timing and my subed-align.el code to realign regions. Idea: I should probably be able to use the word data to realign things semi-automatically, or at least flag things that might need more tweaking. There were a few chunks that weren't recognized at all, but I was able to spot them and fix them when I was fixing the timestamps. Making this more automated will make it much easier to share captioning work with other volunteers. Alternatively, I can also post the talks for captioning before I fix the timestamps, because I can fix them while other people are editing the text.
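Flagging overlapping timestamps is one of the checks that could be automated. The sketch below is a simplified stand-in, not the subed-based workflow described above: it assumes cues are already parsed into `(start_ms, end_ms, text)` tuples sorted by start time, trims any cue that runs into the next one, and reports which cues were touched so that a person can review them.

```python
def flag_and_fix_overlaps(cues):
    """Trim cues that run into the next one; return (fixed cues, flagged indexes).

    Each cue is a (start_ms, end_ms, text) tuple, sorted by start time.
    """
    fixed, flagged = [], []
    for index, (start, end, text) in enumerate(cues):
        if index + 1 < len(cues):
            next_start = cues[index + 1][0]
            if end > next_start:
                # Remember the cue for review and end it where the next one begins.
                flagged.append(index)
                end = next_start
        fixed.append((start, end, text))
    return fixed, flagged


# Hypothetical cues: the first one overlaps the second by half a second.
cues = [(0, 2500, "Hello and welcome"), (2000, 4000, "to this talk"), (4000, 5000, "about Emacs.")]
fixed, flagged = flag_and_fix_overlaps(cues)
print(fixed)
print("Needs review:", flagged)
```

Trimming is only a mechanical fix. The flagged cues are the ones where re-aligning against the audio is most likely to be worth the time.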

While I was correcting the timestamps, it was pretty tempting for me to just go ahead and fix whatever errors I encountered along the way. From there on, it seemed like only a little bit more work was needed in order to get it ready for the speaker to review, and besides, doing subtitles is one of the things I enjoy about EmacsConf. So the end result is that for most of the talks, by the time I finished getting it to the point where I felt like someone who was new to captioning could take over, it was often quite close to being done. I did actually manage to successfully accept some people's help. The code that I wrote for showing me the word differences between two files (sometimes the speaker's script or the subtitles that were edited by another volunteer) was very useful for last-minute merges.
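A word-level comparison of two versions of a transcript can be sketched with Python's standard `difflib` module. This is an illustration of the general technique rather than the Emacs code used for the conference, and the function name and sample sentences are invented.

```python
import difflib


def word_differences(original, edited):
    """Return (operation, original words, edited words) for each changed run of words."""
    a, b = original.split(), edited.split()
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    return [
        (tag, " ".join(a[i1:i2]), " ".join(b[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


# Hypothetical example: a volunteer fixed the capitalization of "Org Mode".
for change in word_differences("we use org mode for notes", "we use Org Mode for notes"):
    print(change)
```

Comparing by words instead of by lines means that differences in where the captions are split do not show up as changes, which matters when one version is a speaker's script and the other is a set of timed subtitles.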

Working on captions is one of my favourite parts. Well-edited captions are totally a nice-to-have, but I like it when people can watch the videos (or skim them) and not worry about not being able to hear or understand what someone said. I enjoy sitting down and spending time with people's presentations, turning them into text that we can search. Captioning helps me feel connected with some of the things I love the most about the Emacs community.

I really appreciated how a number of speakers and volunteers helped with quality control by watching other videos in the backstage area. Idea: I can spend some time improving the design of the backstage area to make it more pleasant and to make it easier for people to find something to work on if they have a little time.

Of course, some talks couldn't be captioned beforehand because they were live. For live conferences and for many of the Q&A sessions, we used BigBlueButton.

Checking speakers in

To keep the live talks and Q&A sessions flowing smoothly, there's a bit of a scramble behind the scenes. We asked speakers to check in at least half an hour before they need to go live, and most of them were able to find their way to the BigBlueButton room using the provided moderator codes. I mostly handled the check-ins, with some help from Corwin when two people needed to be checked in at the same time. We generally didn't have any tech issues, although a couple of people were missing during their Q&A sessions. (We just shrugged and continued; too much to take care of to worry about anything!)

Check-ins are usually good opportunities to chat with speakers, but because I needed to pay attention to the other streams as well as check in other people, it felt a bit more rushed and less enjoyable. I missed having those opportunities to connect, but I'm glad speakers were able to handle so much on their own.

Hosting

Corwin did a lot of the on-stream hosting with a little help from Amin on Saturday. I occasionally jumped in and asked the speakers questions from the pad, but when I was busy checking people in or managing other technical issues cropping up, the speakers also read the questions out themselves so that I could match things up in the transcript afterwards.

No crashes this time, I think. Hooray!

Infrastructure

I used September and October to review all the infrastructure and see what made sense to upgrade. The BigBlueButton instance is on a virtual private server that's dedicated to it, since it doesn't like to share with any other services. Since I set it up from scratch following the recommended configuration, the server uses a recent version of Ubuntu. Some of the other servers use outdated Debian distributions that no longer get security updates. They have other services on them, so I'll leave them to bandali to upgrade when he's ready.

Streaming

I am so, so glad that we had our VNC+OBS setup this year, with two separate user accounts using OBS to stream each track from a virtual desktop on a remote server. We switched to this setup in 2022 so that I could handle multiple tracks without worrying about last-minute coordination or people's laptop specs. If we had still been using our original 2019 setup, with hosts streaming from their personal computers, I think we'd have been dead in the water. Instead, our scripts took care of most of the on-screen actions, so I just needed to rearrange the layout. (Idea: If I can figure out how to get BigBlueButton to use our preferred layout, that would make it even better.)

I used MPV to monitor the streams in little thumbnails. I set those windows to always be on top, and I set the audio so that the general track was on my left side and the development track was on my right. I used some shortcuts to jump between streams reliably, taking advantage of how I bound the Windows key on my laptop to the Super modifier. I used KDE's custom keyboard shortcuts to set Super-g to raise all of my general-track windows and Super-d to do the same for all of the development-track ones. Those were set to commands like this:

/home/sacha/bin/focus_or_launch "emacsconf-gen - TigerVNC"; /home/sacha/bin/focus_or_launch gen.webm; /home/sacha/bin/focus_or_launch "gen.*Konsole"

Code for focus_or_launch

#!/bin/bash

############# GLOBVAR/PREP ###############

Executable="$1"
ExecutableBase="$(basename "$Executable")"
LaunchCommand="$2"
Usage="\
Usage:
 $(basename $0) command [launchcommand] [exclude]
E.g.:
 $(basename $0) google-chrome\
"
ExcludePattern="$3"

################ MAIN ####################

# Find the most recent matching window, skipping windows that match
# the exclude pattern if one was given.
if [[ -z "$ExcludePattern" ]]; then
  MostRecentWID="$(printf "%d" $(wmctrl -xl | grep "$ExecutableBase" | tail -1 2> /dev/null | awk '{print $1}'))"
else
  MostRecentWID="$(printf "%d" $(wmctrl -xl | grep -ve "$ExcludePattern" | grep "$ExecutableBase" | tail -1 2> /dev/null | awk '{print $1}'))"
fi

# No matching window: launch the program (or the custom launch command).
if [[ "$MostRecentWID" == "0" ]]; then
  if [[ -z "$LaunchCommand" ]]; then
    "$Executable" > /dev/null 2>&1 &
  else
    $LaunchCommand > /dev/null 2>&1 &
  fi
  disown
else
  if xdotool search --class "$ExecutableBase" | grep -q "^$(xdotool getactivewindow)$"; then
    xdotool sleep 0.050 key "ctrl+alt+l"
  else
    xdotool windowactivate "$MostRecentWID" 2>&1 | grep failed \
      && xdotool search --class "$ExecutableBase" windowactivate %@
  fi
fi

(Idea: It would be great to easily assign specific windows to shortcuts on my numeric keypad or on a macropad. Maybe I can rename a window or manually update a list that a script can read…)
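A rough Python sketch of the "list that a script can read" idea, under several assumptions: the mapping format (`key = window title`), the key names, and the function names are all made up for illustration, and `emacsconf-dev - TigerVNC` is a guess at the development-track window title. It relies on `wmctrl -a`, which activates the first window whose title contains the given string.

```python
import subprocess


def parse_window_map(text):
    """Parse lines like 'KP_1 = emacsconf-gen - TigerVNC' into a dict.

    Blank lines and lines starting with '#' are ignored.
    """
    mapping = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first '=' only, so titles may contain '='.
        key, sep, title = line.partition("=")
        if sep:
            mapping[key.strip()] = title.strip()
    return mapping


def focus_command(mapping, key):
    """Return the wmctrl command for a key, or None if the key is unassigned."""
    title = mapping.get(key)
    if title is None:
        return None
    # wmctrl -a activates the first window whose title contains this string.
    return ["wmctrl", "-a", title]


def focus(mapping, key):
    """Run the command for a key; do nothing if the key is unassigned."""
    command = focus_command(mapping, key)
    if command is not None:
        subprocess.run(command, check=False)


# Hypothetical assignments; in practice this text would live in a file
# that can be edited by hand during the conference.
EXAMPLE = """
# key = window title substring
KP_1 = emacsconf-gen - TigerVNC
KP_2 = emacsconf-dev - TigerVNC
"""
```

Each keypad or macropad shortcut could then call the script with its own key name, and reassigning a window would only mean editing one line of the list.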

To get the video streams out to viewers, we used Icecast (a streaming media server) on a Linode 64GB 16 core shared CPU server again this year, and that seemed to work out. I briefly contemplated using a more modern setup like nginx-rtmp, Ant Media Server, or SRS if we wanted HLS (wider support), adaptive bitrate streaming, or lower latency, but I decided against it because I didn't want to add more complexity. Good thing too. This year would not have been a good time to experiment with something new.

Like before, watching the stream directly using mpv, vlc, or ffplay was smoother than watching it through the web-based viewers. One of the participants suggested adding more detailed instructions for VLC so that people can enjoy it even without using the command line, so we did.

The 480p stream and the YouTube stream were all right, although I forgot to start one of the YouTube streams until a bit later. Idea: Next time, I can set all the streams to autostart.

Corwin had an extra server lying around, so I used that to restream to Toobnix just in case. That seems to have worked, although I didn't have the brainspace to check on it.

I totally forgot about displaying random packages on the waiting screen. Idea: Maybe next year I can add a fortune.txt to the cache directory with various one-line Emacs tips.
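The fortune.txt idea could be as small as this Python sketch, which picks one random non-empty line from a tips file. The function name and the sample tips are placeholders, and how the chosen line would get onto the waiting screen is left open.

```python
import random


def pick_tip(text, rng=random):
    """Return one random non-empty line from the tips text, or '' if there are none."""
    tips = [line.strip() for line in text.splitlines() if line.strip()]
    return rng.choice(tips) if tips else ""


# Placeholder tips; the real file would hold one tip per line.
SAMPLE_TIPS = """
C-h k describes what a key does.
C-h f describes a function.
"""

if __name__ == "__main__":
    print(pick_tip(SAMPLE_TIPS))
```

Reading the text from a file instead of `SAMPLE_TIPS` is a one-line change with `open(...).read()`.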

I might be able to drop the Icecast server's specifications down to 32GB 8 core if we wanted to.

Publishing

People appreciated being able to get videos and transcripts as soon as each talk aired. Publishing the video files and transcripts generally worked out smoothly, aside from a few times when I needed to manually update the git repository. I modified the code to add more links to the Org files and to walk me through publishing videos to YouTube.

I uploaded all the videos to YouTube and scheduled them so that I didn't have to manage that during the conference. I did not get around to uploading them to Toobnix until after the conference since dealing with lots of duplicated updates is annoying. I've been writing a few more functions to work with the Toobnix API, so it might be a little easier to do things like update descriptions or subtitles next year.

Etherpad

As usual, we used Etherpad to collect questions from conference participants, and many speakers answered questions there as well.

I used the systemli.etherpad Ansible role to upgrade to Etherpad 2.5. This included some security fixes, so that was a relief.

I added pronouns and pronunciations to the Etherpad to make it easier for hosts to remember just in case.

I did not get to use emacsconf-erc-copy to copy IRC to Etherpad because I was too busy juggling everything else, so I just updated things afterwards.

Idea: It might be good to have a backup plan in case I need to switch Etherpad to authenticated users or read-only use. Maybe I can prepare questions beforehand in case we get some serious griefing.

IRC

The plain-text chat channels on IRC continued to be a great place to discuss things, with lots of discussions, comments, and encouraging feedback. My automated IRC system continued to do a good job of posting the talk links, and the announcements made it easier to split up the log by talk.

Planning for social challenges is harder than planning for technical ones, though. libera.chat has been dealing with spam attacks recently. Someone's also been griefing #emacs and other channels via the chat.emacsconf.org web interface, so we decided to keep chat.emacsconf.org dormant until the conference itself. If this is an issue next year, we might need to figure out moderation. I'd prefer to not require everyone to register accounts or be granted voice permissions, so we'll see.
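Going back to splitting the log by talk: the general shape of that step can be sketched in Python as grouping lines under the most recent announcement. This is not the code actually used for EmacsConf. The log lines and the `NOW:` marker below are invented, since the real announcement format isn't shown here, so the matching rule is passed in as a function.

```python
def split_log_by_talk(lines, is_announcement):
    """Group log lines into (announcement, following_lines) sections.

    Lines that appear before the first announcement are dropped.
    """
    sections = []
    current = None
    for line in lines:
        if is_announcement(line):
            current = (line, [])
            sections.append(current)
        elif current is not None:
            current[1].append(line)
    return sections


# Invented log lines; the real announcement format may differ.
EXAMPLE_LOG = [
    "<alice> good morning!",
    "<bot> NOW: first-talk",
    "<alice> nice demo",
    "<bot> NOW: second-talk",
    "<bob> how does that work?",
]

sections = split_log_by_talk(
    EXAMPLE_LOG, lambda line: line.startswith("<bot> NOW: "))
```

Keeping the announcement test separate means the same loop works whether the marker is a nickname, a prefix, or a link pattern.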

Extracting the Q&A

We recorded all the Q&A sessions so that we could post them afterwards. As mentioned, I only started the recording late once. Progress! I generally started it a few minutes early. As I got more confident about paying attention to the start of a session and rearranging the layout on screen, I also got better at starting the recording shortly before turning it over to the speaker. That made it easier to trim the recordings afterwards.

It took me a little longer to get to the Q&A sessions this year. Like last year, getting the recordings from BigBlueButton was fairly easy because I could get a single processed video instead of combining the audio, screenshare, and webcam video myself. This year the single-file video downloads were .m4v files, so I needed to modify things slightly. I think my workflow last year assumed I started with .webm files. Anyway, after some re-encoding, I got it processed. Now I've modified the /usr/local/bigbluebutton/core/scripts/video.yml to enable webm. I wonder if it got wiped after my panic-reinstall after November's OrgMeetup. Idea: I should add the config to my Ansible so that it stays throughout reinstalls and upgrades.

I wrote an emacsconf-subed-copy-current-chapter-text function so that I can more easily paste answers into the Q&A… which turned out to be a superset of the emacsconf-extract-subed-copy-section-text I mentioned in last year's notes and totally forgot about. This tells me that I need to add it to my EmacsConf - organizers-notebook notes so that I actually know what to do. I did not use my completion functions to add section headings based on comments either. Apparently this was emacsconf-extract-insert-note-with-question-heading, which I do have a note about in my organizer notebook.

Audio mixing was reasonable. I normalized the audio in Audacity, and I manually fixed some sections where some participants' audio volumes were lower.

Budget

Hosting a conference online continues to be pretty manageable. Here are our costs for the year (including taxes where applicable):

Make a CSV with the invoice data

(with-temp-file "~/proj/emacsconf/2025/all-invoices.csv"
  (insert
   "\"Description\",\"From\",\"To\",\"Quantity\",\"Region\",\"Unit Price\",\"Amount (USD)\",\"Tax (USD)\",\"Total (USD)\"\n"
   (orgtbl-to-csv
    (seq-mapcat
     (lambda (file)
       (seq-filter
        (lambda (row)
          (string-match "meet\\|front0\\|live0" (car row)))
        (cdr (pcsv-parse-file file))))
     (directory-files-recursively "~/proj/emacsconf/2025/invoices/"
                                  "\\.csv"))
    nil)))

Code for calculating BBB node costs

(append
 '(("Node" "Jan" "Feb" "Mar" "Apr" "May" "Jun" "Jul" "Aug" "Sep" "Oct" "Nov" "Dec" "Total") hline)
 (mapcar
  (lambda (group)
    (let ((amounts (mapcar
                    (lambda (file)
                      (format "%.2f"
                              (apply '+
                                     (seq-keep (lambda (row)
                                                 (when (string-match (car group) (car row))
                                                   (string-to-number (elt row 8))))
                                               (cdr (pcsv-parse-file file))))))
                    (directory-files (format "~/proj/emacsconf/2025/invoices/%s/" (cdr group))
                                     t "\\.csv"))))
      (append (list (car group))
              amounts
              (list (format "%.2f" (apply '+ (mapcar 'string-to-number amounts)))))))
  '(("meet" . "sacha")
    ("front0" . "bandali")
    ("live0" . "bandali")))
 nil)

| Node | Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| meet | 2.17 | 7.55 | 6.78 | 6.74 | 7.13 | 6.95 | 7.19 | 7.27 | 6.75 | 7.19 | 7.56 | 14.02 | 87.30 |
| front0 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 18.79 | 73.79 |
| live0 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 5.00 | 32.89 | 87.89 |

Grand total for 2025: USD 248.98

Server configuration during the conference:

| Node | Specs | Hours upscaled | CPU load |
|---|---|---|---|
| meet | 16GB 8core shared | 52 | peak 172% CPU (100% is 1 CPU) |
| front | 32GB 8core shared | 47 | peak 47% CPU (100% is 1 CPU) |
| live | 64GB 16core shared | 48 | peak 440% CPU (100% is 1 CPU) |
| res | 46GB 12core | | peak 60% total CPU (100% is 12 CPUs; each OBS ~3.5 CPUs), mem 7GB used |
| media | 3GB 1core | | |

These hosting costs are a little higher than 2024 because we now pay for hosting the BigBlueButton server (meet) year-round. That's ~ 5 USD/month for a Linode 1 GB instance and an extra USD 2-3 / month that lets us provide a platform for OrgMeetup, Emacs APAC, and Emacs Berlin by alternating between a 1 GB Linode and an 8 GB Linode as needed. The meetups had previously been using the free Jitsi service, but sometimes that has some limitations. In 2023 and most of 2024, the BigBlueButton server had been provided by FossHost, which has since shut down. This time, maintaining the server year-round meant that we didn't have to do any last-minute scrambles to install and configure the machine, which I appreciated. I could potentially get the cost down further if I use Linode's custom images to create a node from a saved image right before a meetup, but I think that trades about USD 2 of savings/month for much more technical risk, so I'm fine with just leaving it running downscaled.

If we need to cut costs, live0 might be more of a candidate because I think we'll be able to use Ansible scripts to recreate the Icecast setup. I think we're fine, though. Also, based on the CPU peak loads, we might be able to get away with lower specs during the conference (maybe meet: 8 GB, front: 8 GB, live: 32 GB), for an estimated savings of USD 27.76, with the risk of having to worry about it if we hit the limit. So it's probably not a big deal for now.

I think people's donations through the Working Together program can cover the costs for this year, just like last year. (Thanks!) I just have to do some paperwork.

In addition to these servers, Ry P provided res.emacsconf.org for OBS streaming over VNC sessions. The Free Software Foundation also provided media.emacsconf.org for serving media files, and yang3 provided eu.media.emacsconf.org.

If other people are considering running an online conference, the hosting part is surprisingly manageable, at least for our tiny audience size of about 100 peak simultaneous viewers and 35 web conference participants. It was nice to make sure that everyone can watch without ads or JavaScript.

Behind the scenes: In terms of keeping an eye on performance limits, we're usually more CPU-bound than memory- or disk-bound, so we had plenty of room this year. Now I have some Org Babel source blocks to automatically collect the stats from different servers. Here's what that Org block looks like:

#+begin_src sh :dir /ssh:res:/ :results verbatim :wrap example
top -b -n 1 | head
#+end_src

I should write something similar to grab the Icecast stats from http://live0.emacsconf.org:8001/status.xsl periodically. Curl will do the trick, of course, but maybe I can get Emacs to add a row to an Org Mode table.
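As a starting point for grabbing those stats, here is a minimal Python sketch that turns Icecast's JSON status output into Org table rows. It assumes the server also exposes `status-json.xsl` (the JSON status page that ships with Icecast 2.4 and later) next to `status.xsl`. The mount names and numbers in `SAMPLE` are made up, and the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: the JSON status page sits next to status.xsl on the same server.
STATUS_URL = "http://live0.emacsconf.org:8001/status-json.xsl"

# Made-up sample in the shape of Icecast's JSON status; the numbers are not real.
SAMPLE = {
    "icestats": {
        "source": [
            {"listenurl": "http://live0.emacsconf.org:8001/gen.webm",
             "listeners": 3, "listener_peak": 5},
            {"listenurl": "http://live0.emacsconf.org:8001/dev.webm",
             "listeners": 2, "listener_peak": 4},
        ]
    }
}


def fetch_status(url=STATUS_URL):
    """Download and decode the JSON status document."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def listener_rows(status, timestamp):
    """Return one Org table row per mount: time, mount, listeners, peak."""
    sources = status.get("icestats", {}).get("source", [])
    # Icecast reports a single object instead of a list when only one mount is active.
    if isinstance(sources, dict):
        sources = [sources]
    rows = []
    for source in sources:
        mount = source.get("listenurl", "").rsplit("/", 1)[-1]
        rows.append("| {} | {} | {} | {} |".format(
            timestamp, mount,
            source.get("listeners", 0), source.get("listener_peak", 0)))
    return rows
```

Running `listener_rows(fetch_status(), ...)` on a timer and appending the rows to a table would keep a running record, so the numbers would still be there even if nobody remembers to copy them.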

Tracking my time

As part of my general interest in time-tracking, I tracked EmacsConf-related time separately from my general Emacs-related time.

Calculations

(append (list '("Year" "Month" "Hours") 'hline)
        (seq-mapcat
         (lambda (year)
           (mapcar
            (lambda (group)
              (list
               year
               (car group)
               (format "%.1f"
                       (apply '+
                              (mapcar (lambda (o) (/ (alist-get 'duration o) 3600.0))
                                      (cdr group))))))
            (seq-group-by (lambda (o)
                            (substring (alist-get 'date o) 5 7))
                          (quantified-records
                           (concat year "-01-01")
                           (concat year "-12-31")
                           "&filter_string=emacsconf&split=keep&order=oldest"))))
         '("2024" "2025")))

| Year | Month | Hours |
|---|---|---|
| 2024 | 01 | 2.5 |
| 2024 | 07 | 2.8 |
| 2024 | 08 | 0.6 |
| 2024 | 09 | 7.0 |
| 2024 | 10 | 14.4 |
| 2024 | 11 | 30.7 |
| 2024 | 12 | 46.2 |
| 2025 | 01 | 9.7 |
| 2025 | 02 | 1.4 |
| 2025 | 04 | 0.9 |
| 2025 | 06 | 0.8 |
| 2025 | 07 | 6.0 |
| 2025 | 08 | 3.6 |
| 2025 | 09 | 10.9 |
| 2025 | 10 | 1.2 |
| 2025 | 11 | 21.0 |
| 2025 | 12 | 64.3 |

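import pandas as pd
import matplotlib.pyplot as plt

# `data` is the Year/Month/Hours table above (header row first), passed in by Org Babel.
df = pd.DataFrame(data[1:], columns=data[0])
# One row per year, one column per month.
df = pd.pivot_table(df, columns=['Month'], index=['Year'], values='Hours', aggfunc='sum', fill_value=0)
# Include all twelve months, even the ones with no tracked time.
df = df.reindex(columns=range(1, 13), fill_value=0)
df['Total'] = df.sum(axis=1)
# Show blanks instead of zeros.
df = df.applymap(lambda x: f"{x:.1f}" if x != 0 else "")
# The top-level return works because Org Babel wraps the block body in a function.
return df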

| Year | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2024 | 2.5 | | | | | | 2.8 | 0.6 | 7.0 | 14.4 | 30.7 | 46.2 | 104.2 |
| 2025 | 9.7 | 1.4 | | 0.9 | | 0.8 | 6.0 | 3.6 | 10.9 | 1.2 | 21.0 | 64.3 | 119.8 |

This year, I spent more time doing the reencoding and captions in December, since most of the submissions came in around that time. I didn't even listen to all the videos in real time. I used my shortcuts to skim the captions and jump around. It would have been nice to be able to spread out the work a little bit more instead of squeezing most of it into the first week of December, since that would have made it easier to coordinate with other volunteers without feeling like I might have to do more last-minute scrambles if they were busier than expected.

I was a little bit nervous about making sure that the infrastructure was all going to be okay for the conference as well as double checking the videos for possible encoding issues or audio issues. Stress tends to make me gloss over things or make small mistakes, which meant I had to slow down even more to double-check things and add more things to our process documentation.

I'm glad it all worked out. Even if I switched that time to working on Emacs stuff myself, I don't think I would have been able to put together all the kinds of wonderful tips and experiences that other people shared in the conference, so that was worthwhile.

(Here's a longer-term time analysis going back to 2012.)

Thanks

- Thank you to all the speakers, volunteers, and participants, and to all those other people in our lives who make it possible through time and support.

- Thanks to other volunteers:

- Corwin and Amin for helping with the organization

- JC Helary, Triko, and James Endres Howell for help reviewing CFPs

- Amitav Krishna, Rodion Goritskov, jay_bird, and indra for captions

- yang3 for the EU mirror we're setting up

- Bhavin Gandhi, Michael Kokosenski, Iain Young, Jamie Cullen, Ihor Radchenko (yantar92), FlowyCoder for other help

- Thanks to the Free Software Foundation for the mailing lists, the media server, and of course, GNU Emacs.

- Thanks to Ry P for the server that we're using for OBS streaming and processing videos.

- Thanks to the many users, contributors, and project teams that create all the awesome free software we use, especially:

- Emacs, Org Mode, ERC, TRAMP, Magit, BigBlueButton, Etherpad, Ikiwiki, Icecast, OBS, TheLounge, libera.chat, ffmpeg, OpenAI Whisper, WhisperX, the aeneas forced alignment tool, PsiTransfer, subed, sub-seg, Mozilla Firefox, mpv, Tampermonkey

- And many, many other tools and services we used to prepare and host this year's conference

- Thanks to shoshin for the music.

- Thanks to people who donated via the FSF Working Together program: Scott Ranby, Jonathan Mitchell, and 8 other anonymous donors!

Overall

I've already started hearing from people who enjoyed the conference and picked up lots of good tips from it. Wonderful!

EmacsConf was a bit more challenging this year. The world has gotten a lot busier. Return-to-work mandates, job market turmoil, health challenges, bigger societal changes… It's harder for people to find time. For example, the maintainers of Emacs and Org are too busy working on useful updates and bugfixes to rehash the news for us, so maybe I'll get back to doing Emacs News Highlights next year. I'm super lucky in that I can stand outside most of that and make this space where we can take a break and chat about Emacs. I really appreciated having this nice, cozy little place where people could get together and bump into other people who might be interested in similar things, either at the event itself or in the discussions afterwards. I'm glad we started with a large schedule and let things settle down. I'd rather have that slack instead of making speakers feel stressed or guilty.

I love the way that working on the various parts of the conference gives me an excuse to tinker with Emacs and figure out how to use it for more things.

As you can tell from the other sections in this post, I tend to have so much fun doing this that I often forget to check if I've already written the same functions before. When I do remember, I feel really good about being able to build on this accumulation of little improvements.

I didn't feel like I really got to attend EmacsConf this year, but that's not really surprising because I don't usually get to. I think the only time I've actually been able to take proper notes during EmacsConf itself was back in 2013. It's okay, I get to spend a little time with presentations before anyone else does, and there's always the time afterwards. Plus I can always reach out to the speakers myself. It might be nice to be able to just sit and enjoy it and ask questions. Maybe someday when I've automated enough to make this something that I can run easily even on my own.

So let's quickly sketch out some possible scenarios:

- I might need to do it on my own next year, in case other organizers get pulled away at the last minute: I think it's possible, especially if I can plan for some emergency moderation or last-minute volunteers. I had a good experience, despite the stress of juggling things live on stream. (One time, one of the speakers had a question for me, and he had to repeat it a few times before I found the right tab and unmuted.) Still, I think it would be great to do it again next year.

- Some of the other organizers might be more available: It would be nice to have people on screen handling the hosting. If people can help out with verifying the encoding, normalizing the audio, and shepherding videos through the process, that might let me free up some time to coordinate captions with other volunteers even for later submissions.

- I might get better at asking for help and making it easier for people to get involved: That would be pretty awesome. Sometimes it's hard for me to think about what and how, so if some people can take the initiative, that could be good.

This is good. It means that I can probably say yes to EmacsConf even if it's just me and whoever wants to share what they've been learning about Emacs this year. That's the basic level. We're still here, amazing! Anything else people can add to that is a welcome bonus. We'll make stone soup together.

EmacsConf doesn't have to be snazzy. We don't need to try to out-market VS Code or whatever other editors emerge over the next 10 years. We can just keep having fun and doing awesome things with Emacs. I love the way that this online conference lets people participate from all over the world. We like to focus on facilitating sharing and then capturing the videos, questions, and answers so that people can keep learning from them afterwards. I'm looking forward to more of that next year.

I'd love to hear what people thought of it and see how we can make things better together. I'd love to swap notes with organizers of other conferences, too. What's it like behind the scenes?

My next steps are:

- Extract part of this into a report for the emacsconf.org website, like EmacsConf - 2024 - EmacsConf 2024 Report.

- Announce the resources and report on emacsconf-discuss.

- Catch up on the other parts of my life I've been postponing.

- Start thinking about EmacsConf 2026 =)