Quick notes on livestreaming to YouTube with FFmpeg on a Lenovo X230T

Tags: video, youtube, streaming, ffmpeg, yay-emacs. Update: updated scripts.

Text from the sketch

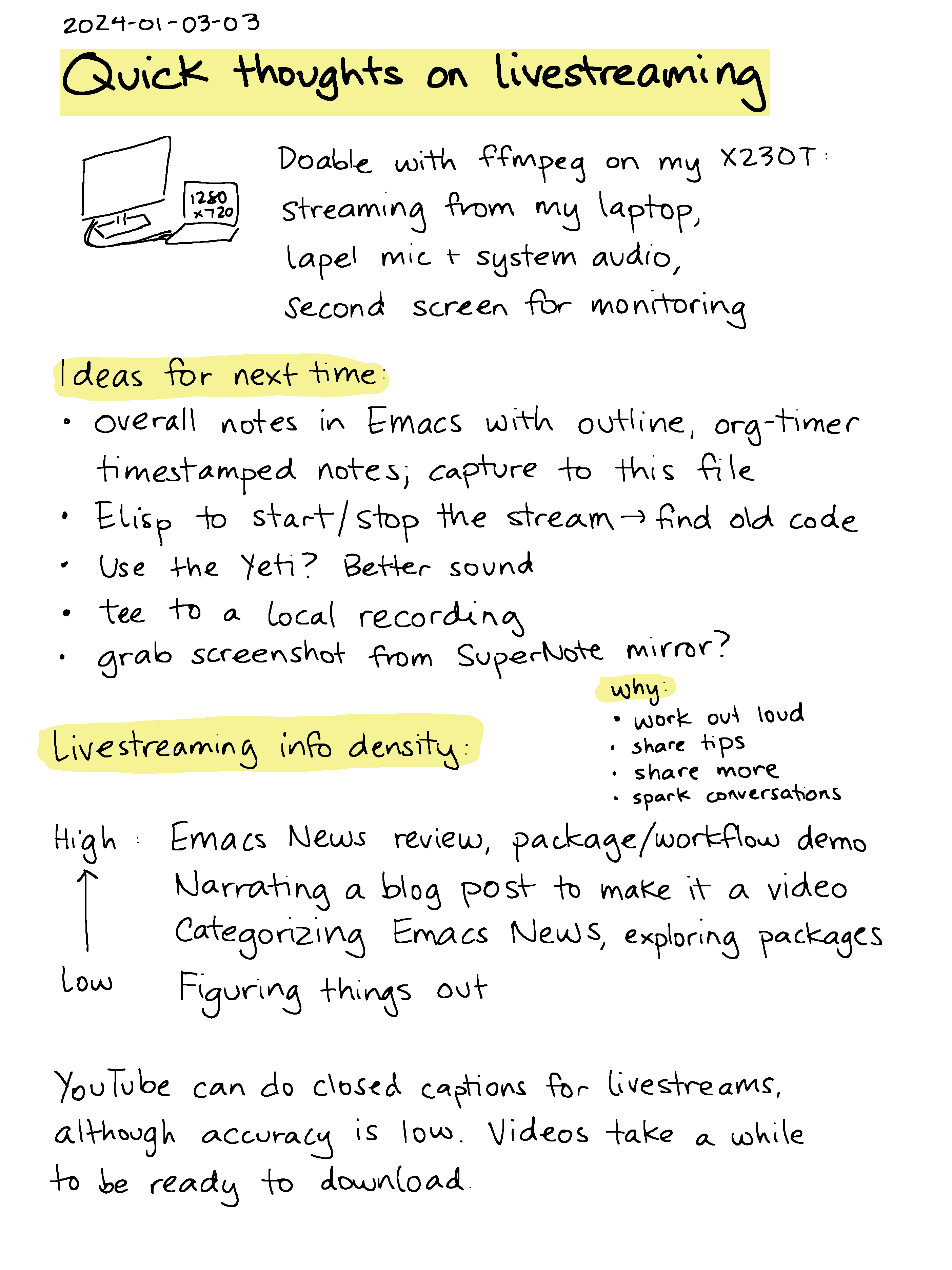

Quick thoughts on livestreaming

Why:

- work out loud

- share tips

- share more

- spark conversations

- (also get questions about things)

Doable with ffmpeg on my X230T:

- streaming from my laptop

- lapel mic + system audio

- second screen for monitoring

Ideas for next time:

- Overall notes in Emacs with outline, org-timer timestamped notes; capture to this file

- Elisp to start/stop the stream → find old code

- Use the Yeti? Better sound

- tee to a local recording

- grab screenshot from SuperNote mirror?
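The start/stop idea mostly comes down to remembering the ffmpeg process so that a second command can stop it. Here is a minimal shell sketch of that, as a stand-in for the Elisp version and not the old code the note refers to. The names start_stream and stop_stream and the STREAM_PIDFILE location are hypothetical.

```sh
# Hypothetical start/stop helpers (a shell stand-in for the Elisp idea).
# The PID file location is an assumption; override STREAM_PIDFILE if needed.
STREAM_PIDFILE=${STREAM_PIDFILE:-/tmp/stream.pid}

# Run the given command (e.g. the ffmpeg streaming command) in the background
# and record its PID. Refuses to start a second copy while one is running.
start_stream() {
  if [ -f "$STREAM_PIDFILE" ] && kill -0 "$(cat "$STREAM_PIDFILE")" 2>/dev/null; then
    echo "already streaming (PID $(cat "$STREAM_PIDFILE"))" >&2
    return 1
  fi
  "$@" &
  echo "$!" > "$STREAM_PIDFILE"
}

# Send SIGTERM to the recorded process and forget its PID.
stop_stream() {
  if [ ! -f "$STREAM_PIDFILE" ]; then
    echo "not streaming" >&2
    return 1
  fi
  kill "$(cat "$STREAM_PIDFILE")" 2>/dev/null
  rm -f "$STREAM_PIDFILE"
}
```

Usage would be start_stream ffmpeg ... followed later by stop_stream, in the same shell where the variables are set. It sends SIGTERM rather than SIGINT because a non-interactive shell starts background commands with SIGINT ignored; ffmpeg treats SIGTERM as a request to stop and close its outputs.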

Live streaming info density:

- High: Emacs News review, package/workflow demo

- Narrating a blog post to make it a video

- Categorizing Emacs News, exploring packages

- Low: Figuring things out

YouTube can do closed captions for livestreams, although accuracy is low. Videos take a while to be ready to download.

Experimenting with working out loud

I wanted to write a report on EmacsConf 2023 so that we could share it with speakers, volunteers, participants, donors, related organizations like the Free Software Foundation, and other communities. I experimented with livestreaming via YouTube while I worked on the conference highlights.

It's a little over an hour long and probably very boring, but it was nice of people to drop by and say hello.

The main parts are:

- 0:00: reading through other conference reports for inspiration

- 6:54: writing an overview of the talks

- 13:10: adding quotes for specific talks

- 25:00: writing about the overall conference

- 32:00: squeezing in more highlights

- 49:00: fiddling with the formatting and the export

It mostly worked out, aside from a brief moment of "uhhh, I'm looking at our private conf.org file on stream". Fortunately, the e-mail addresses that were shown were the public ones.

Technical details

Setup:

I set up environment variables and the screen resolution:

```sh
# From pacmd list-sources | egrep '^\s+name'
LAPEL=alsa_input.usb-Jieli_Technology_USB_Composite_Device_433035383239312E-00.mono-fallback
YETI=alsa_input.usb-Blue_Microphones_Yeti_Stereo_Microphone_REV8-00.analog-stereo
SYSTEM=alsa_output.pci-0000_00_1b.0.analog-stereo.monitor
# MIC=$LAPEL
# AUDIO_WEIGHTS="1 1"
MIC=$YETI
AUDIO_WEIGHTS="0.5 0.5"
OFFSET=+1920,430
SIZE=1280x720
SCREEN=LVDS-1  # from xrandr
xrandr --output $SCREEN --mode 1280x720
```
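The commented-out lines in the setup switch between the lapel mic (weights "1 1") and the Yeti (weights "0.5 0.5"). A small function could make that a single command instead of editing comments. This is a hypothetical sketch: use_mic is an invented name, and it assumes LAPEL and YETI are already set as above.

```sh
# Hypothetical helper: select the mic and its matching amix weights together,
# instead of commenting lines in and out. Assumes LAPEL and YETI are set.
use_mic() {
  case "$1" in
    lapel) MIC=$LAPEL; AUDIO_WEIGHTS="1 1" ;;
    yeti)  MIC=$YETI;  AUDIO_WEIGHTS="0.5 0.5" ;;
    *)     echo "usage: use_mic lapel|yeti" >&2; return 1 ;;
  esac
}
```

Running use_mic yeti (or use_mic lapel) before the test or streaming commands keeps the mic and the weights from drifting out of sync. It has to be defined in the same shell, since it sets variables rather than printing them.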

- I switch to larger text and a light theme. I also turn consult previews off to minimize the risk of leaking data through buffer previews.

my-emacsconf-prepare-for-screenshots: Set the resolution, change to a light theme, and make the text bigger.

```emacs-lisp
(defun my-emacsconf-prepare-for-screenshots ()
  (interactive)
  (shell-command "xrandr --output LVDS-1 --mode 1280x720")
  (modus-themes-load-theme 'modus-operandi)
  (my-hl-sexp-update-overlay)
  (set-face-attribute 'default nil :height 170)
  (keycast-mode))
```

Testing:

```sh
# Check the capture area: grab one frame and look at it
ffmpeg -f x11grab -video_size $SIZE -i :0.0$OFFSET -y /tmp/test.png; display /tmp/test.png

# Check the audio mix. Quote the filter so the space in $AUDIO_WEIGHTS
# doesn't split it into two arguments.
ffmpeg -f pulse -i $MIC -f pulse -i $SYSTEM \
  -filter_complex "amix=inputs=2:weights=$AUDIO_WEIGHTS:duration=longest:normalize=0" \
  -y /tmp/test.mp3; mpv /tmp/test.mp3

# Record locally without streaming (-f flv goes before the output file;
# options after the last output are ignored)
DATE=$(date "+%Y-%m-%d-%H-%M-%S")
ffmpeg -f x11grab -framerate 30 -video_size $SIZE -i :0.0$OFFSET \
  -f pulse -i $MIC -f pulse -i $SYSTEM \
  -filter_complex "amix=inputs=2:weights=$AUDIO_WEIGHTS:duration=longest:normalize=0" \
  -c:v libx264 -preset fast -maxrate 690k -bufsize 2000k -g 60 -vf format=yuv420p \
  -c:a aac -b:a 96k -y -flags +global_header \
  -f flv "/home/sacha/recordings/$DATE.flv"
```
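The test commands depend on several variables being in the right shape, and a mistake (for example, a single weight for a two-input amix) only shows up as an ffmpeg error. A hypothetical preflight check like this could catch that first; the function name and the exact patterns are assumptions based on the values in the setup block.

```sh
# Hypothetical preflight check: validate the setup variables before ffmpeg runs.
check_stream_vars() {
  # SIZE must look like WIDTHxHEIGHT, e.g. 1280x720
  printf '%s\n' "$SIZE" | grep -Eq '^[0-9]+x[0-9]+$' ||
    { echo "bad SIZE: $SIZE" >&2; return 1; }
  # OFFSET is appended to the display (:0.0$OFFSET), so it must be +X,Y
  printf '%s\n' "$OFFSET" | grep -Eq '^\+[0-9]+,[0-9]+$' ||
    { echo "bad OFFSET: $OFFSET" >&2; return 1; }
  # amix=inputs=2 expects one space-separated weight per input
  set -- $AUDIO_WEIGHTS
  [ "$#" -eq 2 ] ||
    { echo "AUDIO_WEIGHTS needs 2 values, got $#" >&2; return 1; }
  # Both PulseAudio source names must be non-empty
  [ -n "$MIC" ] && [ -n "$SYSTEM" ] ||
    { echo "MIC or SYSTEM is empty" >&2; return 1; }
}
```

Running check_stream_vars && ffmpeg ... would then only start ffmpeg when the variables look sane. The word splitting in set -- $AUDIO_WEIGHTS is deliberate here, since it counts the weights.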

Streaming:

```sh
# YOUTUBE_KEY is not set above; it must already hold your YouTube stream key
DATE=$(date "+%Y-%m-%d-%H-%M-%S")
ffmpeg -f x11grab -framerate 30 -video_size $SIZE -i :0.0$OFFSET \
  -f pulse -i $MIC -f pulse -i $SYSTEM \
  -filter_complex "amix=inputs=2:weights=$AUDIO_WEIGHTS:duration=longest:normalize=0[audio]" \
  -c:v libx264 -preset fast -maxrate 690k -bufsize 2000k -g 60 -vf format=yuv420p \
  -c:a aac -b:a 96k -y \
  -f tee -map 0:v -map '[audio]' -flags +global_header \
  "/home/sacha/recordings/$DATE.flv|[f=flv]rtmp://a.rtmp.youtube.com/live2/$YOUTUBE_KEY"
```
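The tee output string packs the local file and the RTMP destination into one argument, and nothing stops the command from running with an empty YOUTUBE_KEY. One option is to build that string with a small function that refuses to continue without a key. tee_outputs is a hypothetical name; the URL is the one from the streaming command.

```sh
# Hypothetical helper: print the tee muxer output list
# "LOCAL_FILE|[f=flv]rtmp://a.rtmp.youtube.com/live2/KEY",
# or fail if the stream key is empty.
tee_outputs() {
  if [ -z "${2:-}" ]; then
    echo "stream key is empty; set YOUTUBE_KEY first" >&2
    return 1
  fi
  printf '%s|[f=flv]rtmp://a.rtmp.youtube.com/live2/%s\n' "$1" "$2"
}

# Usage in a script, in place of the quoted output string:
#   OUTPUTS=$(tee_outputs "/home/sacha/recordings/$DATE.flv" "$YOUTUBE_KEY") || exit 1
#   ffmpeg ... -f tee -map 0:v -map '[audio]' -flags +global_header "$OUTPUTS"
```

The tee muxer treats "|" as the separator between outputs, so a local path containing that character would need escaping; this sketch doesn't handle that.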

To restore my previous setup:

my-emacsconf-back-to-normal: Go back to a more regular setup.

```emacs-lisp
(defun my-emacsconf-back-to-normal ()
  (interactive)
  (shell-command "xrandr --output LVDS-1 --mode 1366x768")
  (modus-themes-load-theme 'modus-vivendi)
  (my-hl-sexp-update-overlay)
  (set-face-attribute 'default nil :height 115)
  (keycast-mode -1))
```

Ideas for next steps

I can think of a few workflow tweaks that might be fun:

- a stream notes buffer on the right side of the screen for context information, timestamped notes to make editing/review easier (maybe using org-timer), etc. I experimented with some streaming-related code in my config, so I can dust that off and see what that's like. I also want to have an org-capture template for it so that I can add notes from anywhere.

- a quick way to add a screenshot from my Supernote to my Org files
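Until an org-timer and org-capture setup exists, the timestamped-notes idea can be approximated from the shell. This hypothetical sketch formats the time since the stream started in the same M:SS style as the list of main parts above and appends a note to a file. STREAM_START, STREAM_NOTES, elapsed_stamp, and stream_note are all invented names.

```sh
# Hypothetical helpers for timestamped stream notes (not org-timer itself).
# STREAM_START holds the start time in epoch seconds, e.g. set with
# STREAM_START=$(date +%s) when the stream begins.
STREAM_NOTES=${STREAM_NOTES:-/tmp/stream-notes.org}

# Print the time elapsed since $1 (epoch seconds) as M:SS, or H:MM:SS after
# the first hour. An optional $2 overrides "now", which helps with testing.
elapsed_stamp() {
  now=${2:-$(date +%s)}
  secs=$((now - $1))
  h=$((secs / 3600))
  m=$((secs % 3600 / 60))
  s=$((secs % 60))
  if [ "$h" -gt 0 ]; then
    printf '%d:%02d:%02d\n' "$h" "$m" "$s"
  else
    printf '%d:%02d\n' "$m" "$s"
  fi
}

# Append "- M:SS note text" to the notes file.
stream_note() {
  printf '%s\n' "- $(elapsed_stamp "$STREAM_START") $*" >> "$STREAM_NOTES"
}
```

For example, elapsed_stamp 1000 1414 prints 6:54, and stream_note writing an overview of the talks adds a line like "- 6:54 writing an overview of the talks" if called that far into the stream.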

I think I'll try going through an informal presentation or Emacs News as my next livestream experiment, since that's probably higher information density.