2026-01-05 Emacs news

emacs, emacs-news | Update: Fixed link to Por qué elegí emacs como mi editor avanzado (2ª parte)

Looking for something to write about? Christian Tietze is hosting the January Emacs Carnival on the theme "This Year, I'll…". Check out last month's carnival on The People of Emacs for other entries.

- Upcoming events (iCal file, Org):

- EmacsATX: Emacs Social https://www.meetup.com/emacsatx/events/312313428/ Thu Jan 8 1600 America/Vancouver - 1800 America/Chicago - 1900 America/Toronto – Fri Jan 9 0000 Etc/GMT - 0100 Europe/Berlin - 0530 Asia/Kolkata - 0800 Asia/Singapore

- Atelier Emacs Montpellier (in person) https://lebib.org/date/atelier-emacs Fri Jan 9 1800 Europe/Paris

- London Emacs (in person): Emacs London meetup https://www.meetup.com/london-emacs-hacking/events/312727757/ Tue Jan 13 1800 Europe/London

- OrgMeetup (virtual) https://orgmode.org/worg/orgmeetup.html Wed Jan 14 0800 America/Vancouver - 1000 America/Chicago - 1100 America/Toronto - 1600 Etc/GMT - 1700 Europe/Berlin - 2130 Asia/Kolkata – Thu Jan 15 0000 Asia/Singapore

- EmacsSF (in person): coffee.el in SF https://www.meetup.com/emacs-sf/events/312735622/ Sat Jan 17 1100 America/Los_Angeles

- Beginner:

- Emacs configuration:

- Emacs Lisp:

- visual-shorthands.el: Purely visual prefix abbreviations (+ thanks to the community) (Reddit)

- n.el - Emacs Lisp function for adding time (@zyd@yap.zyd.lol)

- Sacha Chua: Emacs Lisp: Making a multi-part form PUT or POST using url-retrieve-synchronously and mm-url-encode-multipart-form-data

- [15] Emacs Reader: Progress on Text Selection & Fixing Some Segfaults - 1/4/2026, 3:30:28 PM - Dyne.org TV

- Appearance:

- What do your modelines look like, and how much information is too much?

- Variable-width Serif Fonts when editing plain text in Emacs (@gmoretti@mastodon.social)

- r-b-g-b/emacs-physical-font-size: Keep the Emacs default face at a consistent physical size across monitors (@rbgb@mastodon.sdf.org)

- Protesilaos Stavrou: Emacs: modus-themes version 5.2.0

- "I" created a Stranger things color theme (Reddit, lobste.rs)

- Protesilaos Stavrou: Emacs: ef-orange and ef-fig are part of the ef-themes

- fourier/terminal-green-theme: Emacs Terminal Green color theme - Codeberg.org (@fourier@functional.cafe)

- Navigation:

- Dired:

- Writing:

- Org Mode:

- Chris Maiorana: An Org Mode Deep Work Agenda for 2026

- My Productivity System for 2026 is Boring on Purpose – Curtis McHale (@curtismchale@mastodon.social)

- Tip about using org-timer-item and org-insert-item to keep track of relative timestamps

- org-repeat-by-cron.el: An Org mode task repeater based on Cron expressions (Reddit)

- Refiling to a subset of targets from org-capture (@malcolm@mastodon.social)

- Sacha Chua: Using whisper.el to convert speech to text and save it to the currently clocked task in Org Mode or elsewhere

- Trevoke/org-gtd.el: v4.0.0 - better integration, directed acyclic graphs, DSL for agendas (Reddit)

- (Update) org-headline-card 0.3: SVG rendering engine boosts performance and visual Effects (r/orgmode)

- bradmont/org-roam-tree: organize backlinks in the org-roam buffer as a tree (Reddit)

- Org Social 1.6: location, birthday, language, pinned; this is the final version planned

- Org parser and webapp (for reading) (Reddit) - Python

- Import, export, and integration:

- Completion:

- Coding:

- Integrating IRB (Ruby REPL) into Emacs (01:11)

- gongo/emacs-riscv (HN)

- Zellij Revisited: Crafting Project Layouts - System Crafters Live! (59:56)

- dns-mode: handy for editing zone files, even incrementing serial numbers on save (@pipes@fosstodon.org)

- DamianB-BitFlipper/magit-pre-commit.el: Emacs: pre-commit integration for Magit. (Reddit)

- Marcin Borkowski: Magit and new branch length

- Shells:

- Web:

- Doom Emacs:

- Fun:

- AI:

- Community:

- Fortnightly Tips, Tricks, and Questions — 2025-12-30 / week 52

- Christian Tietze: Emacs Carnival 2026-01: “This Year, I’ll …”

- A person of emacs: acdw (@eludom@fosstodon.org)

- Amin Bandali: The People of Emacs (Reddit)

- Christian Tietze: The People Who Got Me into Emacs

- Sacha Chua: J'apprécie les gens d'Emacs / I appreciate the people of Emacs

- Entering the Church of Emacs - mauromotion.com (@mauro@mograph.social)

- Alvaro Ramirez: My 2025 review as an indie dev

- Some 2025 thankyous and the big 2026 lisp plan (@screwlisp@gamerplus.org)

- ReLifeBook, the daily life of a retrocomputing hobbyist, Emacs Lisp and FPGA: summing up my hobby activities / Habr (@habr@zhub.link)

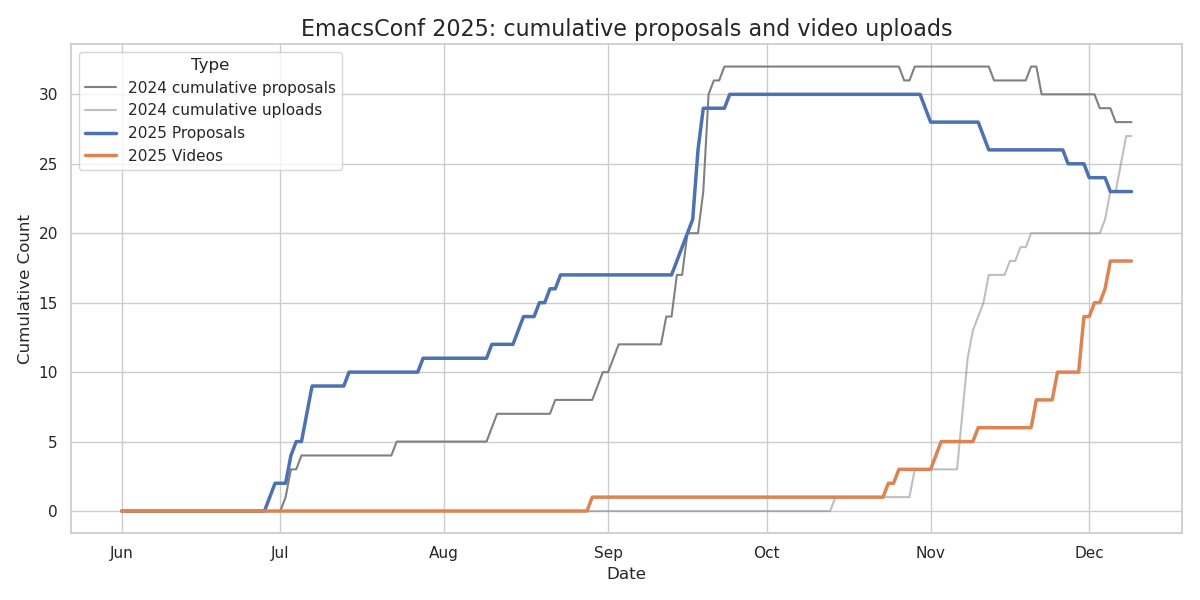

- Sacha Chua: EmacsConf 2025 notes

- Other:

- "Deleting blank links in a document today", a poem

- DarkBuffalo/arbo.el: Graphical Project Tree Generator for Emacs (@DarkBuffalo@mastodon.social)

- 3 Minute Guide to Emacs Autoinsert Mode #coding #programming #org (02:35)

- [ANN] shift-number now incorporates evil-numbers

- (Update) grid-table 0.2: Built-in chart formulas + chart viewer (C-c v) (Reddit)

- Kana: Extensibility: The "100% Lisp" Fallacy (HN, lobste.rs)

- Emacs on kindle

- The Art of Text (rendering) (Reddit)

- Emacs development:

- New packages:

- ai-code: Unified interface for AI coding CLI tool such as Codex, Copilot CLI, Opencode, Grok CLI, etc (MELPA)

- eglot-python-preset: Eglot preset for Python (MELPA)

- evil-tex-ts: Tree-sitter based LaTeX text objects for Evil (MELPA)

- go-template-helper-mode: Overlay Go template highlighting (MELPA)

- gptel-cpp-complete: GPTel-powered C++ completion (MELPA)

- org-roam-timeline: Visual timeline for Org-Roam nodes (MELPA)

- pass-coffin: Interface to "pass coffin" (MELPA)

- porg: Bring org-mode features to any prog mode (MELPA)

- scad-ts-mode: Tree-sitter support for OpenSCAD (MELPA)

- tmpl-mode: Minor mode for "tmpl" template files (MELPA)

- visual-shorthands: Visual abbreviations for symbol prefixes (MELPA)

- vulpea-ui: Sidebar infrastructure and widget framework for vulpea notes (MELPA)

Links from reddit.com/r/emacs, r/orgmode, r/spacemacs, Mastodon #emacs, Bluesky #emacs, Hacker News, lobste.rs, programming.dev, lemmy.world, lemmy.ml, planet.emacslife.com, YouTube, the Emacs NEWS file, Emacs Calendar, and emacs-devel. Thanks to Andrés Ramírez for emacs-devel links. Do you have an Emacs-related link or announcement? Please e-mail me at sacha@sachachua.com. Thank you!