[2023-01-14 Sat]: Removed my fork since upstream now has the :eval function.

The Q&A session for What I'd like to see in Emacs (Richard Stallman) from EmacsConf 2022 was done over Mumble. Amin pasted the questions into the Mumble chat buffer and I copied them into a larger buffer as the speaker answered them, but I didn't do it consistently. I figured it might be worth making another video with easier-to-read visuals.

At first, I thought about using LaTeX to create Beamer slides with the question text, which I could then turn into a video using ffmpeg. Then I decided to figure out how to animate the text in Emacs, because why not? I figured a straightforward typing animation would probably be less distracting than animate-string, and emacs-director seems to handle that nicely. I forked it to add a few things I wanted, like variables to slow down the typing speed (so that it could type more reliably on my old laptop, since the timers sometimes seemed to hiccup) and an :eval step for running things without needing to log them. (2023-01-14: Upstream has the :eval feature now.)

To make it easy to synchronize the resulting animation with the chapter markers I derived from the transcript of the audio file, I decided to beep between scenes. First step: make a beep file.

ffmpeg -y -f lavfi -i 'sine=frequency=1000:duration=0.1' beep.wav
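If ffmpeg isn't handy, a similar tone can be generated with just the Python standard library. This is a minimal sketch, not what I actually used: the function names, the 44100 Hz sample rate, and the 0.5 amplitude are assumptions picked for illustration, so the result won't be byte-identical to the ffmpeg output.

```python
import math
import struct
import wave


def sine_samples(frequency=1000.0, duration=0.1, rate=44100, amplitude=0.5):
    """Return 16-bit integer samples for a sine tone (hypothetical helper)."""
    n_frames = int(round(rate * duration))
    peak = amplitude * 32767  # scale to the signed 16-bit range
    return [int(round(peak * math.sin(2 * math.pi * frequency * n / rate)))
            for n in range(n_frames)]


def write_wav(path, samples, rate=44100):
    """Write mono 16-bit samples to a WAV file (hypothetical helper)."""
    with wave.open(path, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)  # 2 bytes = 16 bits per sample
        out.setframerate(rate)
        out.writeframes(struct.pack("<%dh" % len(samples), *samples))
```

Calling write_wav("beep.wav", sine_samples()) would then write a 0.1-second 1000 Hz beep to the current directory.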

Next, I animated the text, with a beep between scenes. I used subed-parse-file to read the question text directly from the chapter markers, and I used simplescreenrecorder to set up the recording settings (including audio).

;; Play the beep in the background without disturbing the window layout.
(defun my-beep ()
  (interactive)
  (save-window-excursion
    (shell-command "aplay ~/recordings/beep.wav &" nil nil)))

(require 'director)
(defvar emacsconf-recording-process nil)
;; Resize the focused window so that the recording has a consistent size.
(shell-command "xdotool getwindowfocus windowsize 1282 720")
(progn
  ;; Set up a clean, large-font Org buffer for the questions.
  (switch-to-buffer (get-buffer-create "*Questions*"))
  (erase-buffer)
  (org-mode)
  (face-remap-add-relative 'default :height 300)
  (setq-local mode-line-format " Q&A for EmacsConf 2022: What I'd like to see in Emacs (Richard M. Stallman) - emacsconf.org/2022/talks/rms")
  (sit-for 3)
  (delete-other-windows)
  (hl-line-mode -1)
  ;; Stop any previous recorder, then start a new hidden recording.
  (when (process-live-p emacsconf-recording-process) (kill-process emacsconf-recording-process))
  (setq emacsconf-recording-process (start-process "ssr" (get-buffer-create "*ssr*")
                                                   "simplescreenrecorder"
                                                   "--start-recording"
                                                   "--start-hidden"))
  (sit-for 3)
  (director-run
   :version 1
   :log-target '(file . "/tmp/director.log")
   :before-start
   (lambda ()
     (switch-to-buffer (get-buffer-create "*Questions*"))
     (delete-other-windows))
   :steps
   (let ((subtitles (subed-parse-file "~/proj/emacsconf/rms/emacsconf-2022-rms--what-id-like-to-see-in-emacs--answers--chapters.vtt")))
     (apply #'append
            ;; Title scene: beep, then type the heading.
            (list
             (list :eval '(my-beep))
             (list :type "* Q&A for Richard Stallman's EmacsConf 2022 talk: What I'd like to see in Emacs\nhttps://emacsconf.org/2022/talks/rms\n\n"))
            ;; One scene per chapter: type the question as a subheading,
            ;; wait, then beep to mark the end of the scene.
            (mapcar
             (lambda (sub)
               (list
                (list :log (elt sub 3))
                (list :eval '(progn (org-end-of-subtree)
                                    (unless (bolp) (insert "\n"))))
                (list :type (concat "** " (elt sub 3) "\n\n"))
                (list :eval '(org-back-to-heading))
                (list :wait 5)
                (list :eval '(my-beep))))
             subtitles)))
   :typing-style 'human
   :delay-between-steps 0
   ;; Save the recording and quit the recorder whether or not the run succeeds.
   :after-end (lambda ()
                (process-send-string emacsconf-recording-process "record-save\nwindow-show\nquit\n"))
   :on-failure (lambda ()
                 (process-send-string emacsconf-recording-process "record-save\nwindow-show\nquit\n"))
   :on-error (lambda ()
               (process-send-string emacsconf-recording-process "record-save\nwindow-show\nquit\n"))))

I used the following code to copy the latest recording to animation.webm and extract the audio to animation.wav. my-latest-file and my-recordings-dir are in my Emacs config.

(let ((name "animation.webm"))
  (copy-file (my-latest-file my-recordings-dir) name t)
  (shell-command
   (format "ffmpeg -y -i %s -ar 8000 -ac 1 %s.wav"
           (shell-quote-argument name)
           (shell-quote-argument (file-name-sans-extension name)))))

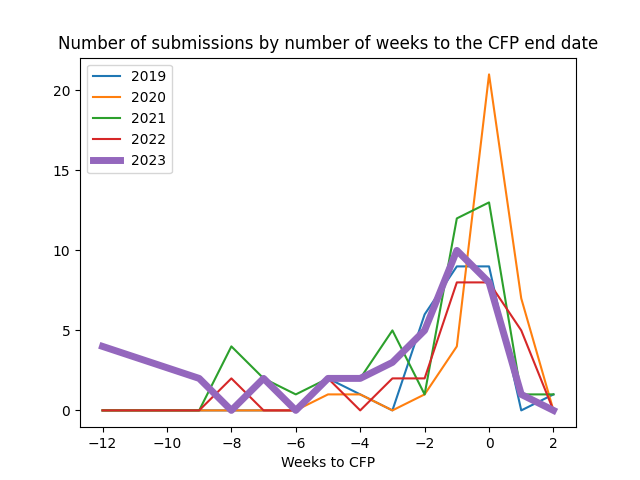

Then I needed to get the timestamps of the beeps in the recording. I subtracted a little bit (0.82 seconds) based on comparing the waveform with the results.

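from scipy.io import wavfile
from scipy import signal
import numpy as np

filename = "animation.wav"
rate, source = wavfile.read(filename)
# height: minimum sample value that counts as a peak.
# distance: minimum number of samples between peaks
# (1000 samples is 0.125 s at the 8000 Hz rate used for the extraction).
peaks = signal.find_peaks(source, height=1000, distance=1000)
# peaks[0] holds the sample indices; convert them to seconds and apply the offset.
base_times = (peaks[0] / rate) - 0.82
print(base_times)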
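To show the general idea without SciPy, here is a simplified stand-in that uses only the standard library. It is not what find_peaks does internally (find_peaks looks for local maxima and filters them by height and spacing). Instead, it treats any sample at or above a threshold as loud and starts a new beep whenever the previous loud sample is more than a minimum gap away. The function names, the synthetic test signal, and the min_gap default are all assumptions for illustration.

```python
import math


def beep_onsets(samples, rate, threshold=1000, min_gap=0.5):
    """Return the start time in seconds of each burst of loud samples.

    A new burst starts when the previous loud sample is more than
    min_gap seconds back (or when there was no loud sample before).
    """
    onsets = []
    last_loud = None
    for i, sample in enumerate(samples):
        if abs(sample) >= threshold:
            if last_loud is None or (i - last_loud) / rate > min_gap:
                onsets.append(i / rate)
            last_loud = i
    return onsets


def synth_beeps(rate, length, beep_starts, beep_len=0.1, freq=1000.0, amp=8000):
    """Build silence with short sine beeps at the given start times (test data)."""
    samples = [0] * int(rate * length)
    for start in beep_starts:
        first = int(start * rate)
        for n in range(int(beep_len * rate)):
            samples[first + n] = int(amp * math.sin(2 * math.pi * freq * n / rate))
    return samples


if __name__ == "__main__":
    signal_data = synth_beeps(8000, 5.0, [1.0, 3.0])
    print(beep_onsets(signal_data, 8000))
```

With real recordings, the detected times would still need a manual offset like the one above, since the beep is heard slightly after the scene actually changes on screen.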

I noticed that the first question didn't seem to get beeped properly, so I tweaked the times. Then I wrote some code to generate a very long ffmpeg command that used trim and tpad to select the segments and extend them to the right durations. There was some drift when I did it without the audio track, but the timestamps seemed to work right when I included the Q&A audio track as well.

import math
import subprocess

import ffmpeg  # ffmpeg-python
import numpy as np
import webvtt  # webvtt-py

chapters_filename = "emacsconf-2022-rms--what-id-like-to-see-in-emacs--answers--chapters.vtt"
answers_filename = "answers.wav"
animation_filename = "animation.webm"

def get_length(filename):
    # Duration of the file in seconds, as reported by ffprobe.
    result = subprocess.run(["ffprobe", "-v", "error", "-show_entries",
                             "format=duration", "-of",
                             "default=noprint_wrappers=1:nokey=1", filename],
                            stdout=subprocess.PIPE,
                            stderr=subprocess.STDOUT)
    return float(result.stdout)

def get_frames(filename):
    # Number of video packets in the first video stream, as reported by ffprobe.
    result = subprocess.run(["ffprobe", "-v", "error", "-select_streams", "v:0", "-count_packets",
                             "-show_entries", "stream=nb_read_packets", "-of",
                             "csv=p=0", filename],
                            stdout=subprocess.PIPE,
                            stderr=subprocess.STDOUT)
    return float(result.stdout)

answers_length = get_length(answers_filename)
# Beep times from the previous step, with the first question tweaked by hand.
times = np.asarray([ 1.515875, 13.50, 52.32125 , 81.368625, 116.66625 , 146.023125,
                     161.904875, 182.820875, 209.92125 , 226.51525 , 247.93875 ,
                     260.971 , 270.87375 , 278.23325 , 303.166875, 327.44925 ,
                     351.616375, 372.39525 , 394.246625, 409.36325 , 420.527875,
                     431.854 , 440.608625, 473.86825 , 488.539 , 518.751875,
                     544.1515 , 555.006 , 576.89225 , 598.157375, 627.795125,
                     647.187125, 661.10875 , 695.87175 , 709.750125, 717.359875])
fps = 30.0
# Pair consecutive beep times into (start, end) spans of the animation.
times = np.append(times, get_length(animation_filename))
anim_spans = list(zip(times[:-1], times[1:]))
chapters = webvtt.read(chapters_filename)
if chapters[0].start_in_seconds == 0:
    vtt_times = [[c.start_in_seconds, c.text] for c in chapters]
else:
    vtt_times = [[0, "Introduction"]] + [[c.start_in_seconds, c.text] for c in chapters]
vtt_times = vtt_times + [[answers_length, "End"]]
# Each entry becomes [start, end, text].
vtt_times = [[x[0][0], x[1][0], x[0][1]] for x in zip(vtt_times[:-1], vtt_times[1:])]
test_rate = 1.0
i = 0
concat_list = ""
groups = list(zip(anim_spans, vtt_times))
animation = ffmpeg.input('animation.webm').video
audio = ffmpeg.input('rms.opus')
# Built for an overlay-based variant; the concat command below does not use it.
for_overlay = ffmpeg.input('color=color=black:size=1280x720:d=%f' % answers_length, f='lavfi')
# Low-quality settings for quick test renders; overridden by the full settings below.
params = {"b:v": "1k", "vcodec": "libvpx", "r": "30", "crf": "63"}
test_limit = 1
params = {"vcodec": "libvpx", "r": "30", "copyts": None, "b:v": "1M", "crf": 24}
test_limit = 0
anim_rate = 1
cursor = 0
if test_limit > 0:
    groups = groups[0:test_limit]
clips = []
for anim, vtt in groups:
    # Time left over in this chapter after the animation segment has played.
    padding = vtt[1] - cursor - (anim[1] - anim[0]) / anim_rate
    if (padding < 0):
        print("Squeezing", math.floor((anim[1] - anim[0]) / (anim_rate * 1.0)), 'into', vtt[1] - cursor, padding)
        clips.append(animation.trim(start=anim[0], end=anim[1]).setpts('PTS-STARTPTS'))
    elif padding == 0:
        clips.append(animation.trim(start=anim[0], end=anim[1]).setpts('PTS-STARTPTS'))
    else:
        print("%f to %f: Padding %f into %f - pad: %f" % (cursor, vtt[1], (anim[1] - anim[0]) / (anim_rate * 1.0), vtt[1] - cursor, padding))
        cursor = cursor + padding + (anim[1] - anim[0]) / anim_rate
        # Hold the last frame until the end of the chapter.
        clips.append(animation.trim(start=anim[0], end=anim[1]).setpts('PTS-STARTPTS').filter('tpad', stop_mode="clone", stop_duration=padding))
    for_overlay = for_overlay.overlay(animation.trim(start=anim[0], end=anim[1]).setpts('PTS-STARTPTS+%f' % vtt[0]))
    clips.append(audio.filter('atrim', start=vtt[0], end=vtt[1]).filter('asetpts', 'PTS-STARTPTS'))
# Interleaved video and audio clips are concatenated into one output.
args = ffmpeg.concat(*clips, v=1, a=1).output('output.webm', **params).overwrite_output().compile()
print(' '.join(f'"{item}"' for item in args))
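The padding arithmetic in the loop above can be sketched on its own with made-up numbers, without ffmpeg. This is only an illustration under a few assumptions: plan_segments and its parameter names are hypothetical, the times are invented, and unlike the loop above, this version advances the cursor for every segment (including ones that overrun their chapter), which is my guess at the intent rather than a copy of the original behaviour.

```python
def plan_segments(anim_times, anim_end, chapter_starts, audio_end):
    """Pair animation spans with chapter spans and compute freeze-frame padding.

    anim_times: start time of each scene in the animation recording.
    chapter_starts: start time of each chapter in the audio.
    Returns one dict per segment with the span to trim from the animation,
    the matching audio span, and how long to hold the last frame.
    """
    anim_bounds = list(anim_times) + [anim_end]
    chapter_bounds = list(chapter_starts) + [audio_end]
    plan = []
    cursor = 0.0  # seconds of output video scheduled so far
    for i in range(min(len(anim_times), len(chapter_starts))):
        a0, a1 = anim_bounds[i], anim_bounds[i + 1]
        c0, c1 = chapter_bounds[i], chapter_bounds[i + 1]
        clip_len = a1 - a0
        # Hold the last frame until the chapter ends; never pad negatively.
        padding = max(0.0, c1 - cursor - clip_len)
        plan.append({"trim": (a0, a1), "audio": (c0, c1), "pad": padding})
        cursor += clip_len + padding
    return plan


if __name__ == "__main__":
    for segment in plan_segments([0, 10, 25], 30, [0, 20, 50], 60):
        print(segment)
```

Each "trim" span corresponds to a trim filter, and each non-zero "pad" corresponds to a tpad with stop_mode="clone". If a segment runs longer than its chapter, the overrun is absorbed by a shorter pad on the next segment, so the video still ends with the audio.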

Anyway, it's here for future reference. =)